SoundScape Interaction (2015)

The SoundScape Interaction project was a four-month adventure in room acoustics, carried out in collaboration with Imperial College London and Bang & Olufsen. The idea was to build a VR game in which players could experience how a room affects sound propagation. Visual scenes were created and rendered in Blender and its game engine, and explored through an Oculus DK2 using BlenderVR. Room acoustics were simulated in Max/MSP, with part of the patch based on IRCAM’s Spat rendering engine. I learnt a lot while developing this project, and would like to thank once more the researchers from B&O and ICL for taking me in.

Demo Reel

Assets Description

During the experiment, participants were exposed to different room acoustics. They were first asked to indicate whether the acoustics matched the room they were in, and then to indicate when they perceived a change in the room acoustics while the reverberation time (RT60) was switched between predefined values (a Just Noticeable Difference, or JND, assessment).
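RT60 is the time it takes for the sound level in a room to drop by 60 dB once the source stops. The sketch below illustrates that definition and one simple way a JND could be read out from the predefined RT60 steps. It is not the procedure used in the project: it assumes a hypothetical ascending method of limits, in which candidate RT60 values above a reference are presented in increasing order and the JND is taken as the first difference the participant reports hearing. The function names and the simulated listener are illustrative only.

```python
from typing import Callable, Iterable, Optional


def decay_level_db(t: float, rt60: float) -> float:
    """Level (dB, relative to the moment the source stops) after t seconds,
    assuming an ideal exponential decay: by definition of RT60, the level
    has dropped by 60 dB after rt60 seconds."""
    return -60.0 * t / rt60


def estimate_jnd(
    reference_rt60: float,
    candidate_rt60s: Iterable[float],
    perceived_change: Callable[[float], bool],
) -> Optional[float]:
    """Hypothetical ascending method of limits.

    Candidates (assumed to lie above the reference) are presented in
    increasing order; perceived_change(rt60) stands in for the participant's
    answer. Returns the RT60 difference at the first reported change, or
    None if no change was ever reported.
    """
    for rt60 in sorted(candidate_rt60s):
        if perceived_change(rt60):
            return rt60 - reference_rt60
    return None


if __name__ == "__main__":
    # Simulated listener (purely illustrative): notices a change once the
    # RT60 differs from the 1.0 s reference by at least 0.05 s.
    reference = 1.0
    steps = [1.02, 1.04, 1.06, 1.08, 1.10]
    jnd = estimate_jnd(reference, steps, lambda rt: rt - reference >= 0.05)
    print(round(jnd, 3))  # prints 0.06
    print(decay_level_db(1.0, 2.0))  # prints -30.0
```

With real participants, the callable would be replaced by the recorded responses. An adaptive staircase would be a common alternative when finer estimates are needed.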

Room 0: no reverb, used as introduction scene

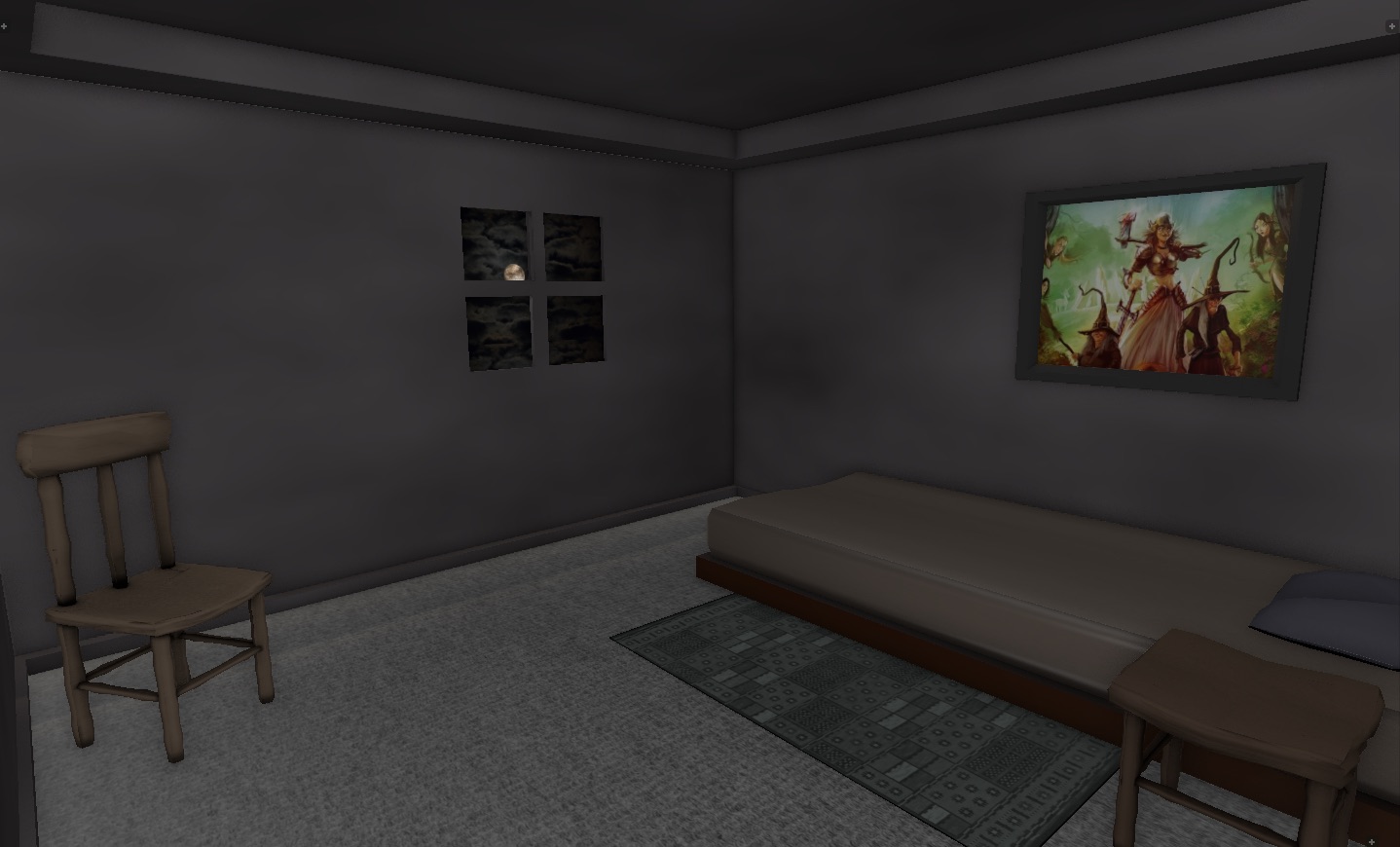

Room 1: Bedroom-like reverb

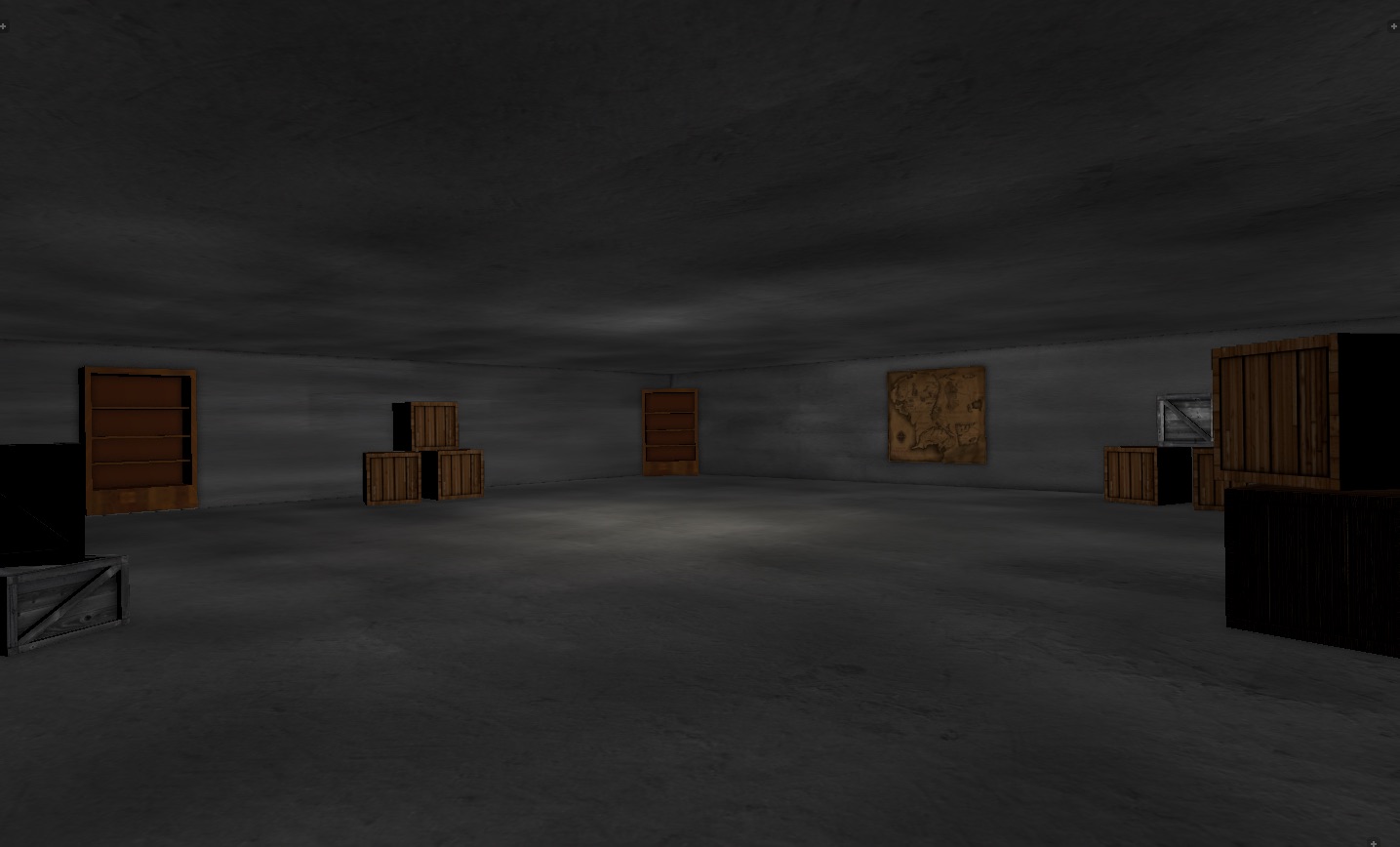

Room 2: Garage-like reverb

Room 3: Church-like reverb

Room 3: Improved version (used in the Ghost Orchestra Project)

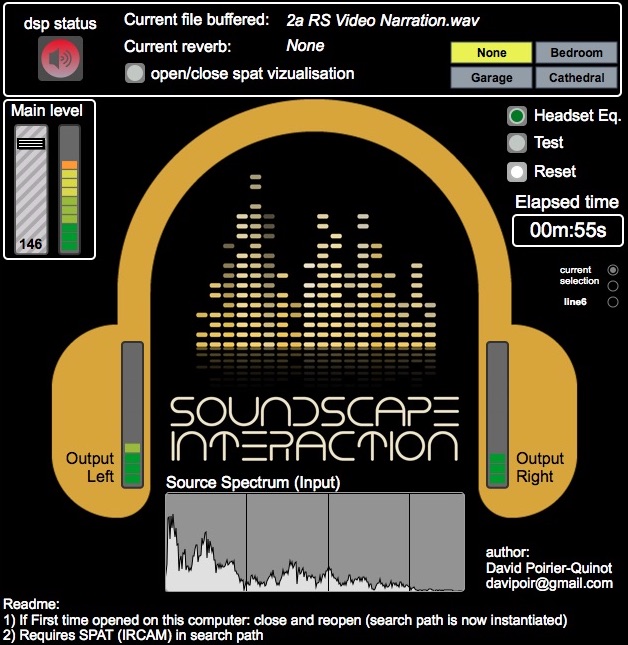

Audio Engine (in Cycling '74 Max)