3D Tune-In (2018)

3D Tune-In was an H2020 European project, coordinated by Imperial College London, on the use of 3D sound and gamification techniques to support people who use hearing aids. The objective was to help users make better use of their hearing aids, and to let non-wearers experience how hearing loss can impact everyday life.

Project introduction video

My first contribution to the project was to help understand and simulate the impact of various hearing loss conditions on the perception of an auditory scene. We used this research to develop the 3D Tune-In audio engine, a binaural encoder capable of simulating both the effects of various hearing loss conditions and how hearing aids help mitigate them. The engine can be downloaded from the 3D Tune-In website as a VST plug-in, a C++ SDK, or a Unity plug-in.

Snapshot of the 3D Tune-In audio engine GUI
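To give a rough idea of what such a simulation involves, here is a deliberately simplified sketch, not the 3D Tune-In implementation. A hypothetical audiogram (hearing thresholds in dB HL per frequency band) is turned into per-band attenuation factors, and the hearing aid is modeled with the half-gain rule, a classic fitting rule of thumb that prescribes a gain equal to half the hearing loss in each band. The audiogram values and function names are illustrative assumptions; a realistic simulator would also model effects such as loudness recruitment and reduced frequency selectivity, and would apply the gains through a filter bank rather than as a table of numbers.

```python
# Hypothetical audiogram (illustrative values): threshold in dB HL per band (Hz).
AUDIOGRAM = {250: 10, 500: 15, 1000: 25, 2000: 40, 4000: 60, 8000: 70}


def db_to_linear(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


def hearing_loss_gains(audiogram):
    """Crude loss model: attenuate each band by its threshold shift (in dB)."""
    return {f: db_to_linear(-loss) for f, loss in audiogram.items()}


def half_gain_rule(audiogram):
    """Fitting rule of thumb: prescribed gain (dB) = 50% of the loss per band."""
    return {f: 0.5 * loss for f, loss in audiogram.items()}


def aided_gains(audiogram):
    """Net per-band amplitude factor once the aid's gain offsets part of the loss."""
    prescribed = half_gain_rule(audiogram)
    return {f: db_to_linear(prescribed[f] - loss) for f, loss in audiogram.items()}


if __name__ == "__main__":
    unaided = hearing_loss_gains(AUDIOGRAM)
    aided = aided_gains(AUDIOGRAM)
    for freq in AUDIOGRAM:
        print(f"{freq:>5} Hz  unaided x{unaided[freq]:.3f}  aided x{aided[freq]:.3f}")
```

With this audiogram, the 4000 Hz band is attenuated by 60 dB unaided and by 30 dB aided, which illustrates why a hearing aid partially, but not fully, restores audibility.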

Room acoustics and Ambisonic encoding order

To provide realistic scenarios for evaluating hearing aid features, we implemented room acoustics support in the audio engine, based on room impulse response (RIR) convolution. Since the engine was intended to run on all types of platforms (mobile, web, etc.), we spent some time investigating how to achieve the best perceived rendering quality for a minimal CPU budget. As part of these investigations, we conducted a series of perceptual experiments to assess the impact of room acoustics rendering “resolution” on the perception of an auditory scene. The examples below are from one of these studies, in which we compared the perceived quality of room acoustics generated using mono, stereo, or Ambisonic (1st, 2nd, and 3rd order) impulse responses. The footsteps in these scenes were created using Charles Verron's SPAD synthesizer.

Anechoic scene (original)

Stereo RIR

Ambisonic 3rd order RIR
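The CPU trade-off explored in this study comes largely from the number of RIR channels, since each channel means one more convolution per source. The sketch below is an illustration under simple assumptions, not the engine's code: it convolves a dry signal with each channel of a multichannel RIR using plain direct-form convolution, counts channels per format (full-sphere Ambisonics of order N uses (N + 1)² channels), and estimates the direct-convolution cost. The function names and the 48 kHz default sample rate are hypothetical, and the binaural decoding of the Ambisonic channels is not shown.

```python
def convolve(signal, ir):
    """Direct-form linear convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(ir):
            out[i + j] += x * h
    return out


def rir_channel_count(fmt, order=1):
    """Number of RIR channels, i.e. convolutions needed per source."""
    if fmt == "mono":
        return 1
    if fmt == "stereo":
        return 2
    if fmt == "ambisonic":
        # Full-sphere Ambisonics of order N uses (N + 1)^2 channels.
        return (order + 1) ** 2
    raise ValueError(f"unknown RIR format: {fmt}")


def render_reverb(dry, rir):
    """Convolve a dry source with every channel of a multichannel RIR."""
    return [convolve(dry, channel) for channel in rir]


def direct_cost_macs_per_second(channels, rir_length, sample_rate=48000):
    """Multiply-accumulates per second for direct (non-FFT) convolution."""
    return channels * rir_length * sample_rate


if __name__ == "__main__":
    for fmt, order in [("mono", 0), ("stereo", 0), ("ambisonic", 1),
                       ("ambisonic", 2), ("ambisonic", 3)]:
        print(fmt, order, rir_channel_count(fmt, order))
```

Going from mono to 3rd-order Ambisonics multiplies the number of convolutions by 16 (1, 2, 4, 9, and 16 channels respectively). Real-time engines usually rely on FFT-based, partitioned convolution, which is much cheaper than the direct form for long RIRs, but the cost still grows linearly with the channel count.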

Experiment on HRTF selection and binaural localization

Using the 3D Tune-In toolkit, we developed a short VR game for HRTF selection. The concept was quite simple (if not new): you hear a sound, point towards the direction you think it came from, and survive to the next round if you're right. Based on your results, the game suggests the HRTF set (i.e., audio profile) that gives you the best localization performance.

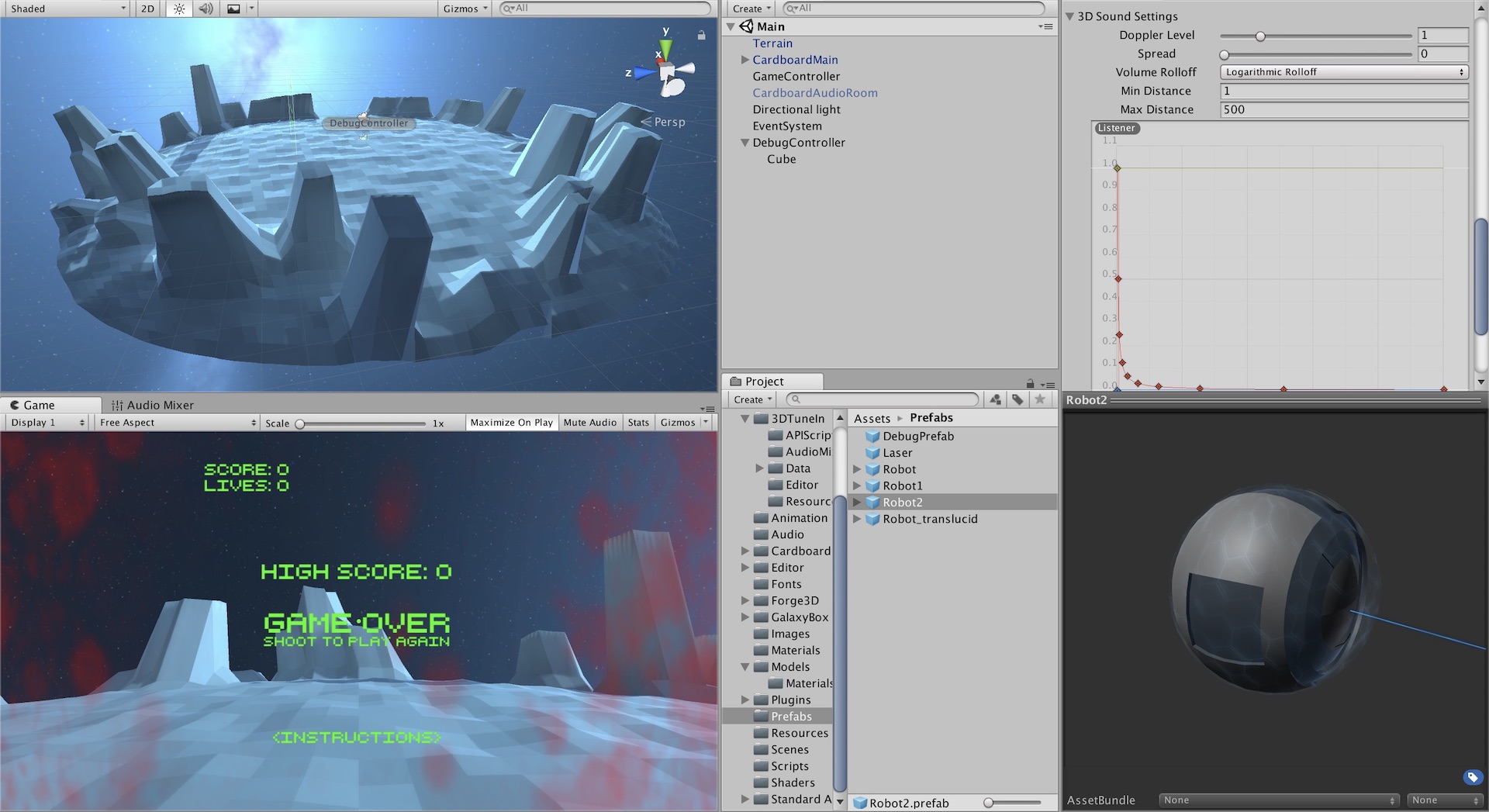

HRTF selection game: Unity editor
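The selection logic described above can be sketched as follows, as an illustration rather than the game's actual code. Each trial records a target direction and the direction the player pointed to, both as (azimuth, elevation) in degrees; the localization error is the great-circle angle between them, a round counts as a hit when that error is within a tolerance, and the suggested HRTF set is the one with the lowest mean error. The 30° tolerance, the HRTF names, and the trial data are hypothetical.

```python
import math


def angular_error_deg(target, response):
    """Great-circle angle (degrees) between two (azimuth, elevation) directions."""
    az1, el1 = map(math.radians, target)
    az2, el2 = map(math.radians, response)
    cos_angle = (math.sin(el1) * math.sin(el2)
                 + math.cos(el1) * math.cos(el2) * math.cos(az1 - az2))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def is_hit(target, response, tolerance_deg=30.0):
    """A round is won if the pointed direction is close enough to the source."""
    return angular_error_deg(target, response) <= tolerance_deg


def best_hrtf(trials):
    """trials maps an HRTF set name to a list of (target, response) pairs."""
    mean_error = {
        name: sum(angular_error_deg(t, r) for t, r in pairs) / len(pairs)
        for name, pairs in trials.items()
    }
    return min(mean_error, key=mean_error.get), mean_error


if __name__ == "__main__":
    trials = {
        "HRTF_A": [((0, 0), (10, 0)), ((90, 0), (80, 0))],
        "HRTF_B": [((0, 0), (40, 0)), ((90, 0), (45, 0))],
    }
    name, errors = best_hrtf(trials)
    print(name, errors)
```

Using the great-circle angle rather than separate azimuth and elevation errors keeps the score meaningful near the poles, where large azimuth differences correspond to small actual deviations.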