Rasputin (2018 - 2023)

The goal of the Rasputin project was to enable blind people to pre-explore places in virtual reality. Much like Google Maps, but for interior spaces, virtual auditory exploration would help them build mental maps to orient themselves when navigating the real building.

Measuring the test scenarios

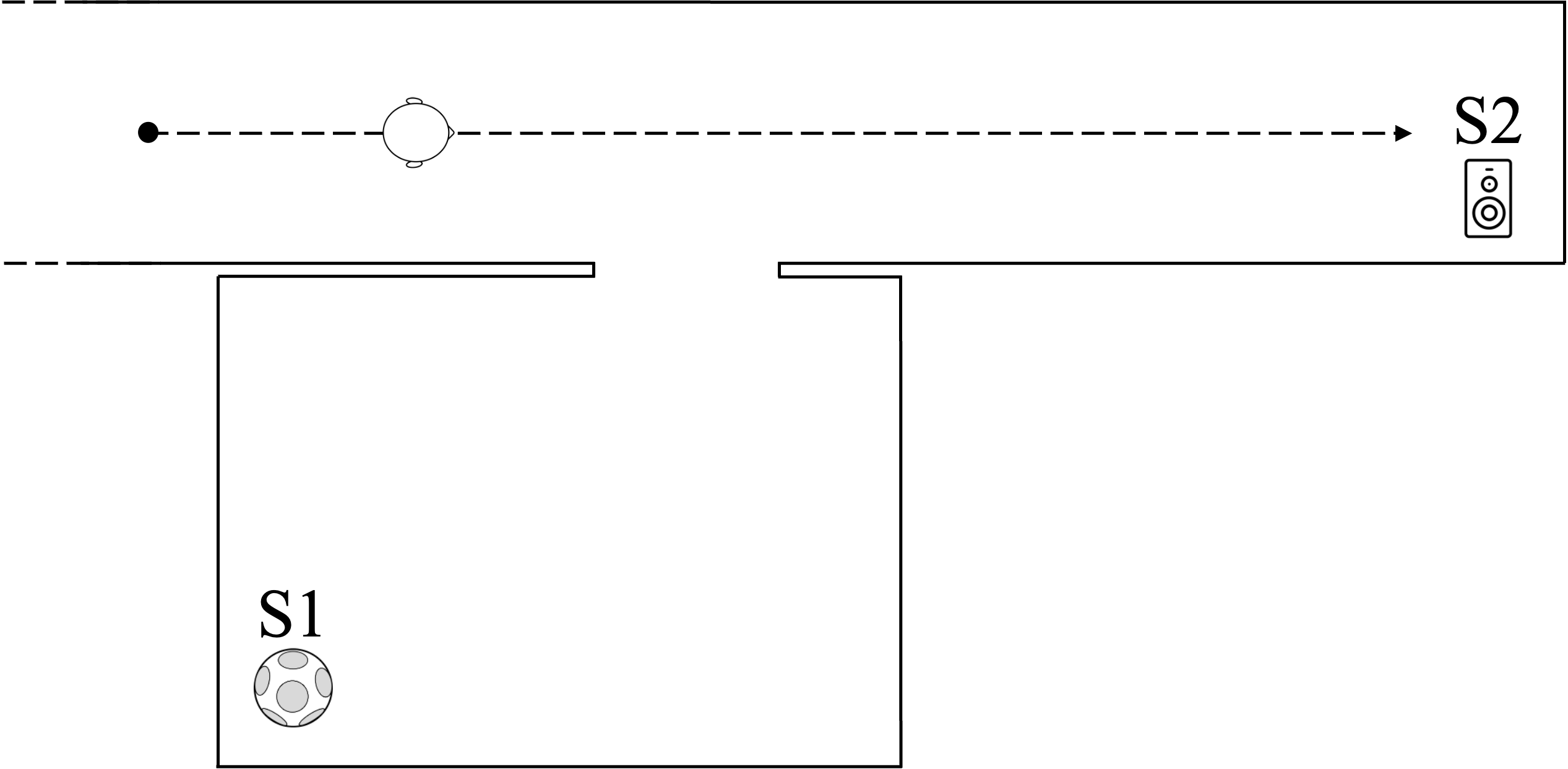

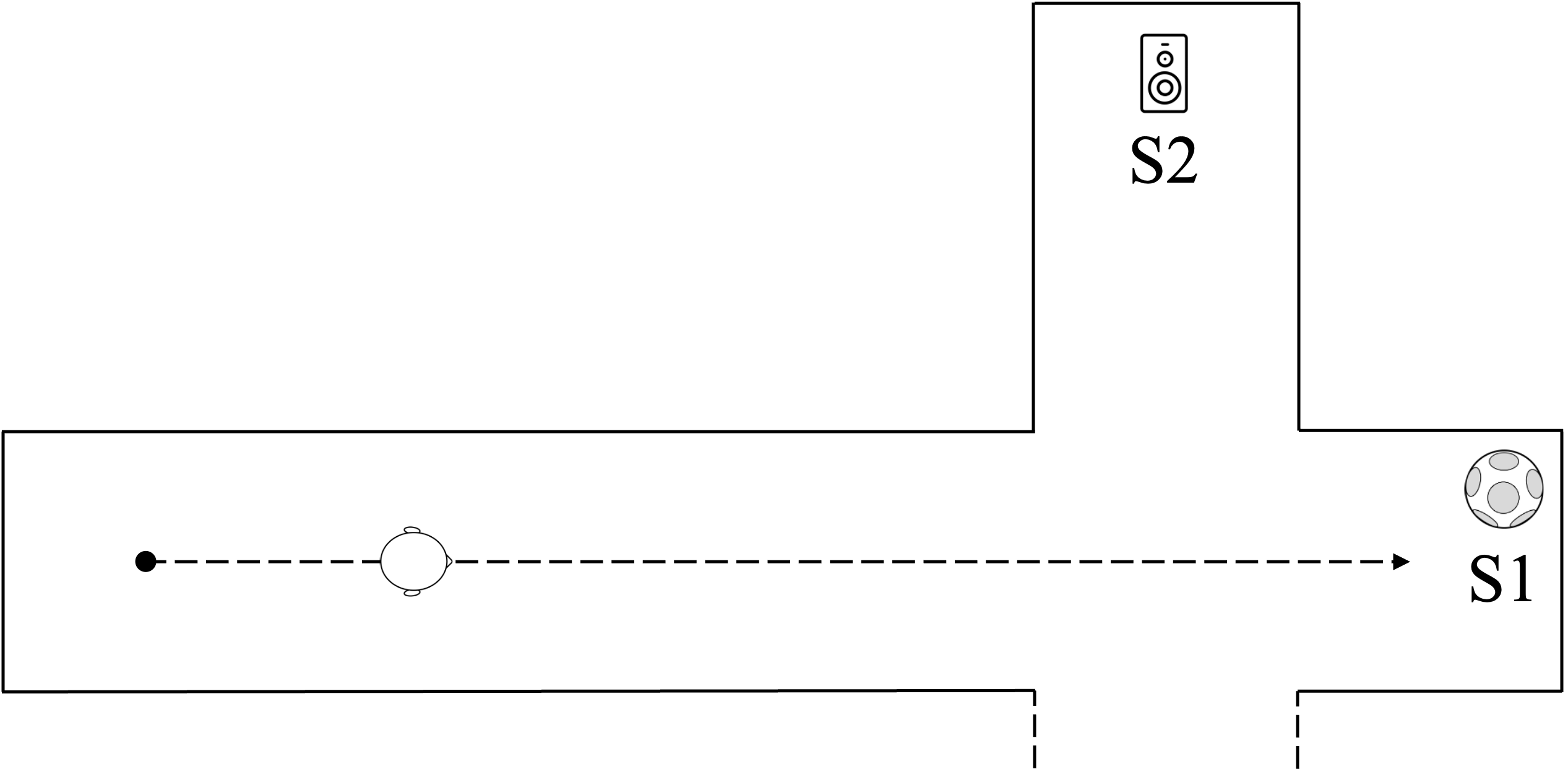

The targeted navigation scenarios were large spaces such as museums or city halls. To test our workflows and identify the acoustic cues blind people rely on during navigation, however, we started small. We selected a range of archetypal interior navigation scenarios (corridor, intersection, etc.) for the first recording campaign and measured RIRs along predetermined navigation paths.
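Because RIRs are measured only at discrete points along each path, playback has to map the listener's position to one of those points. The text does not say how this was done in the project. A minimal sketch, assuming measurement positions are stored as sorted distances along a one-dimensional path and the nearest point is simply selected (`nearest_rir_index` is a hypothetical helper):

```python
from bisect import bisect_left


def nearest_rir_index(positions, s):
    """Return the index of the measured RIR position closest to s.

    Hypothetical helper: `positions` holds sorted distances (in metres)
    along a navigation path where RIRs were measured, and `s` is the
    listener's current distance along that same path.
    """
    if not positions:
        raise ValueError("positions must not be empty")
    i = bisect_left(positions, s)  # first index with positions[i] >= s
    if i == 0:
        return 0  # before (or exactly at) the first measurement point
    if i == len(positions):
        return len(positions) - 1  # past the last measurement point
    before, after = positions[i - 1], positions[i]
    # Choose the closer neighbour; ties go to the earlier point.
    return i - 1 if s - before <= after - s else i


# Usage with illustrative values: RIRs measured every 50 cm.
path = [0.0, 0.5, 1.0, 1.5, 2.0]
print(nearest_rir_index(path, 1.2))  # nearest point is 1.0 m, index 2
```

Nearest-neighbour switching is the simplest option. With coarse spatial sampling it can cause audible jumps between RIRs, which is one reason to crossfade or interpolate between neighbouring points instead.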

We measured binaural RIRs with a Neumann KU-100 dummy head and 3rd-order Ambisonic RIRs with an Eigenmike. We placed small loudspeakers where the user's feet, cane and hands would be, so that we could auralise footsteps, cane taps and finger snaps.
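For readers less familiar with Ambisonics, the two formats differ a lot in size. A full-sphere Ambisonic signal of order $N$ carries $(N+1)^2$ channels. Each third-order RIR therefore has 16 channels, compared with the 2 channels (left and right ear) of a binaural RIR:

$$
C(N) = (N+1)^2, \qquad C(3) = (3+1)^2 = 16
$$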

RIR analysis and listening tests helped us understand the conditions under which auralisations conveyed useful layout information (type of sounds, spatial sampling, interface, etc.). The tests were conducted with a panel of blind people who worked with us throughout the project.

Measuring the final scenarios

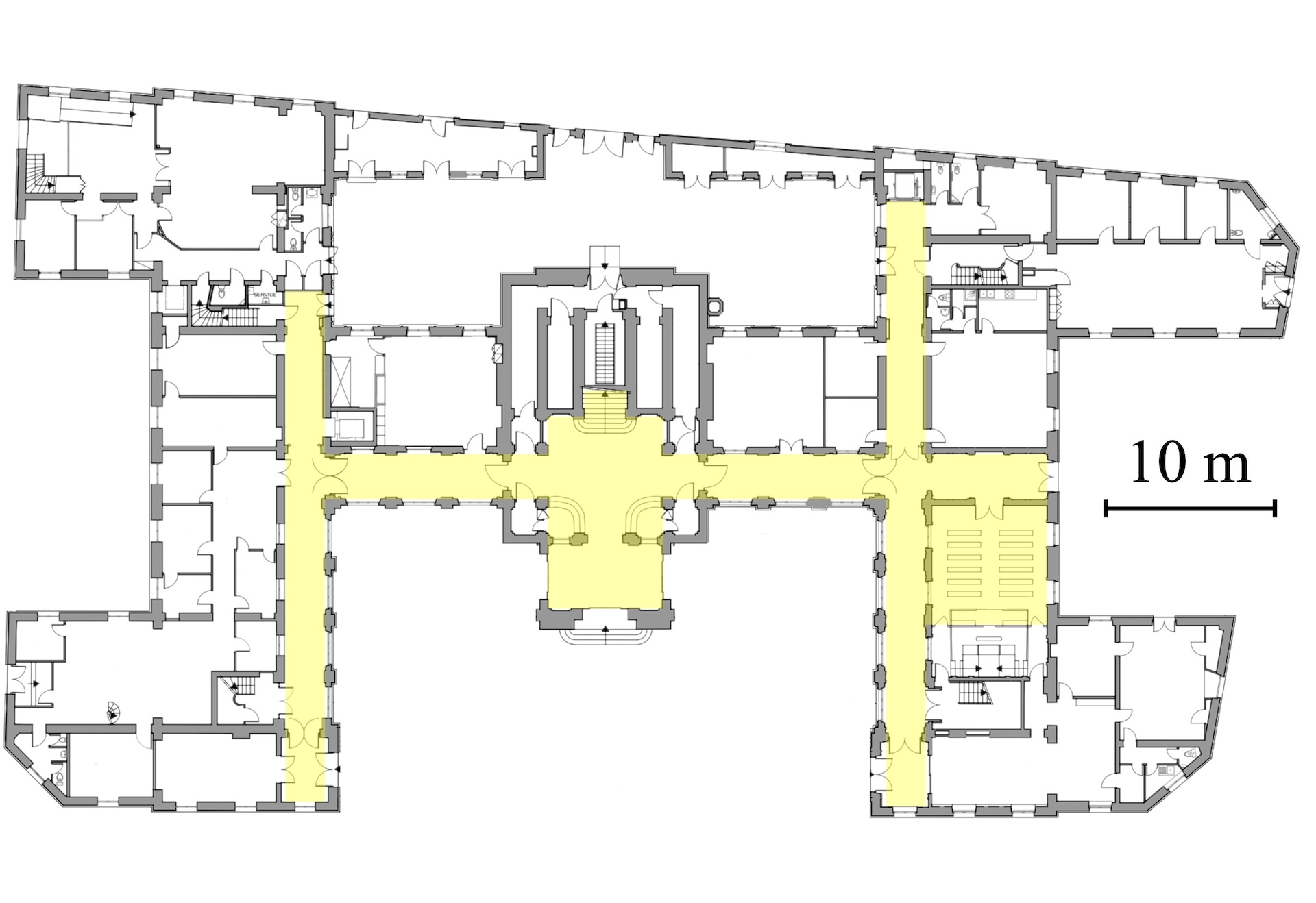

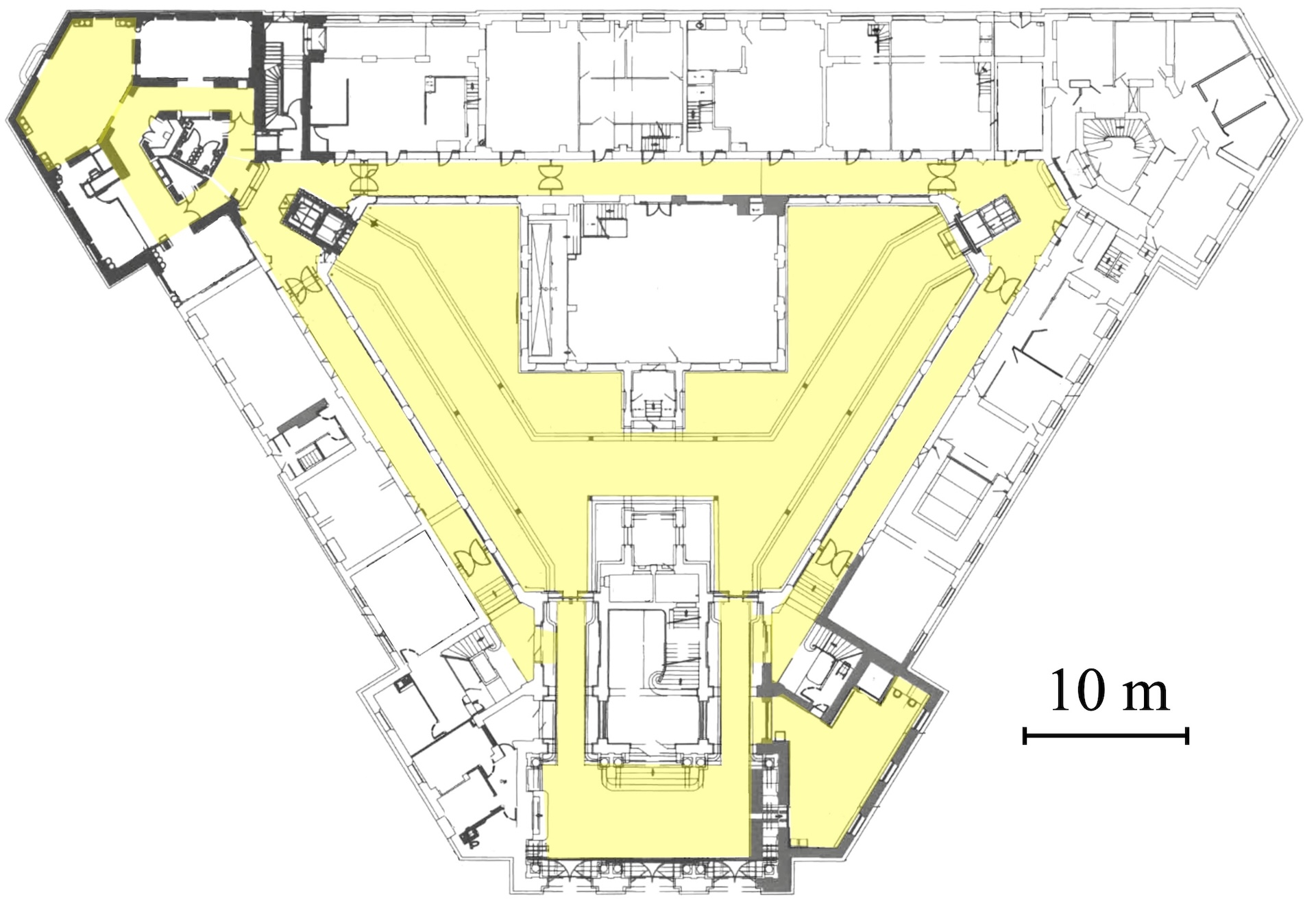

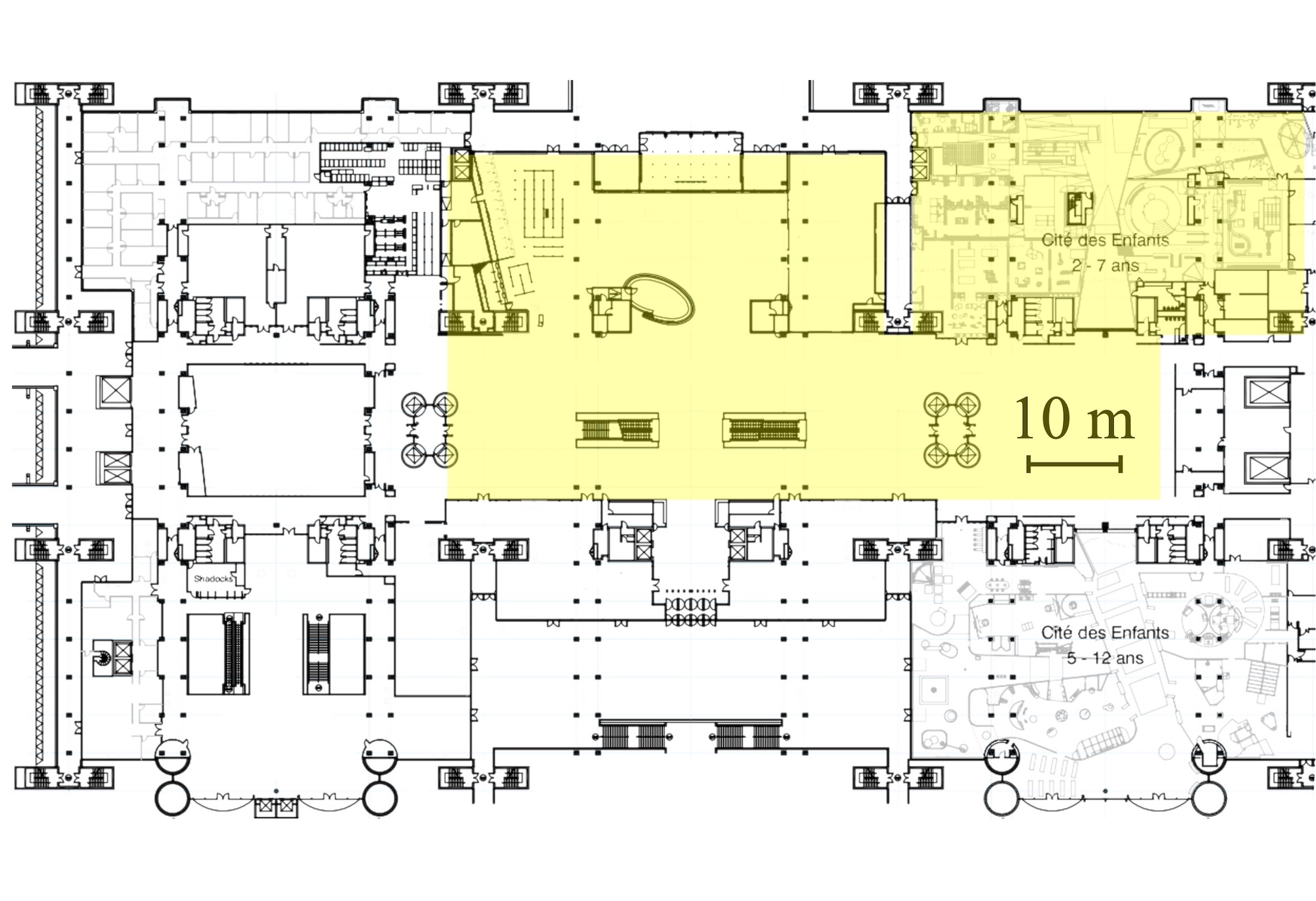

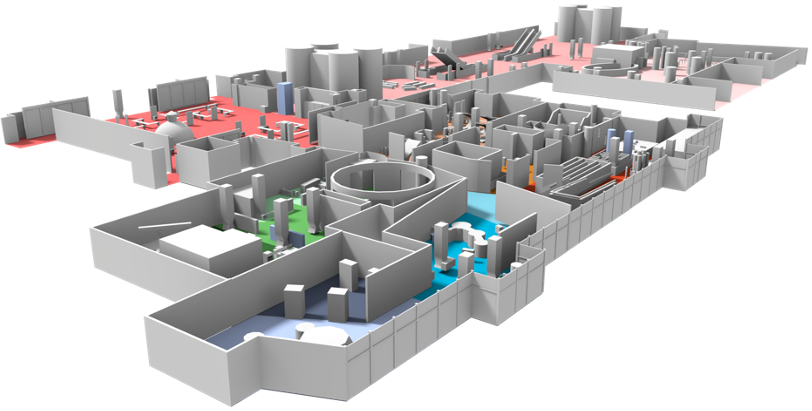

We selected three large buildings with increasingly challenging layouts to serve as sandboxes for auralisation and navigation tests: the city halls of Paris's 3rd and 20th districts, and the “Cité des sciences et de l'industrie” museum in Paris.

In each building, we conducted RIR measurement and ambience recording campaigns to be able to generate various auralisation scenarios. Highlighted in yellow below are the areas covered during these campaigns.

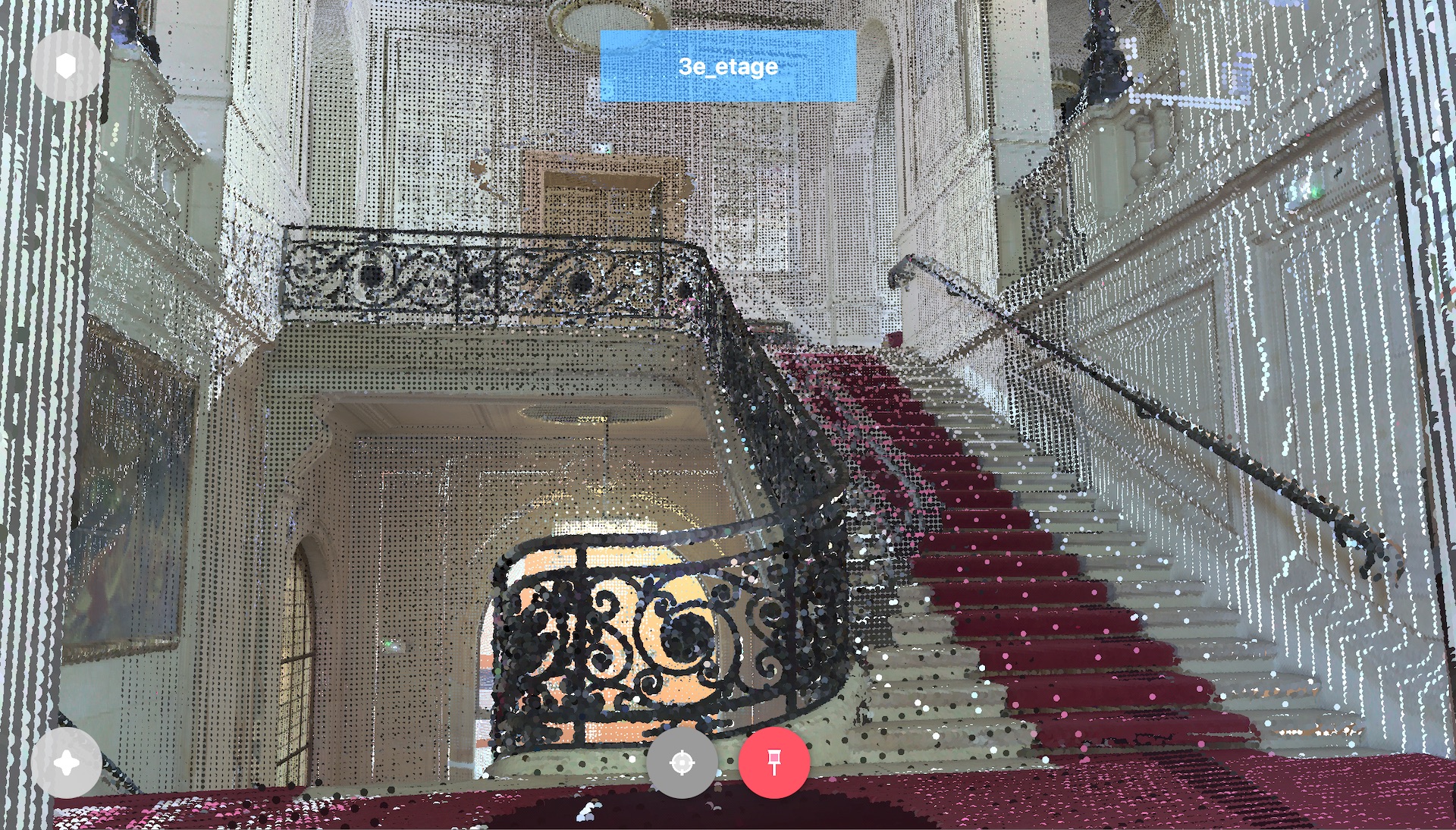

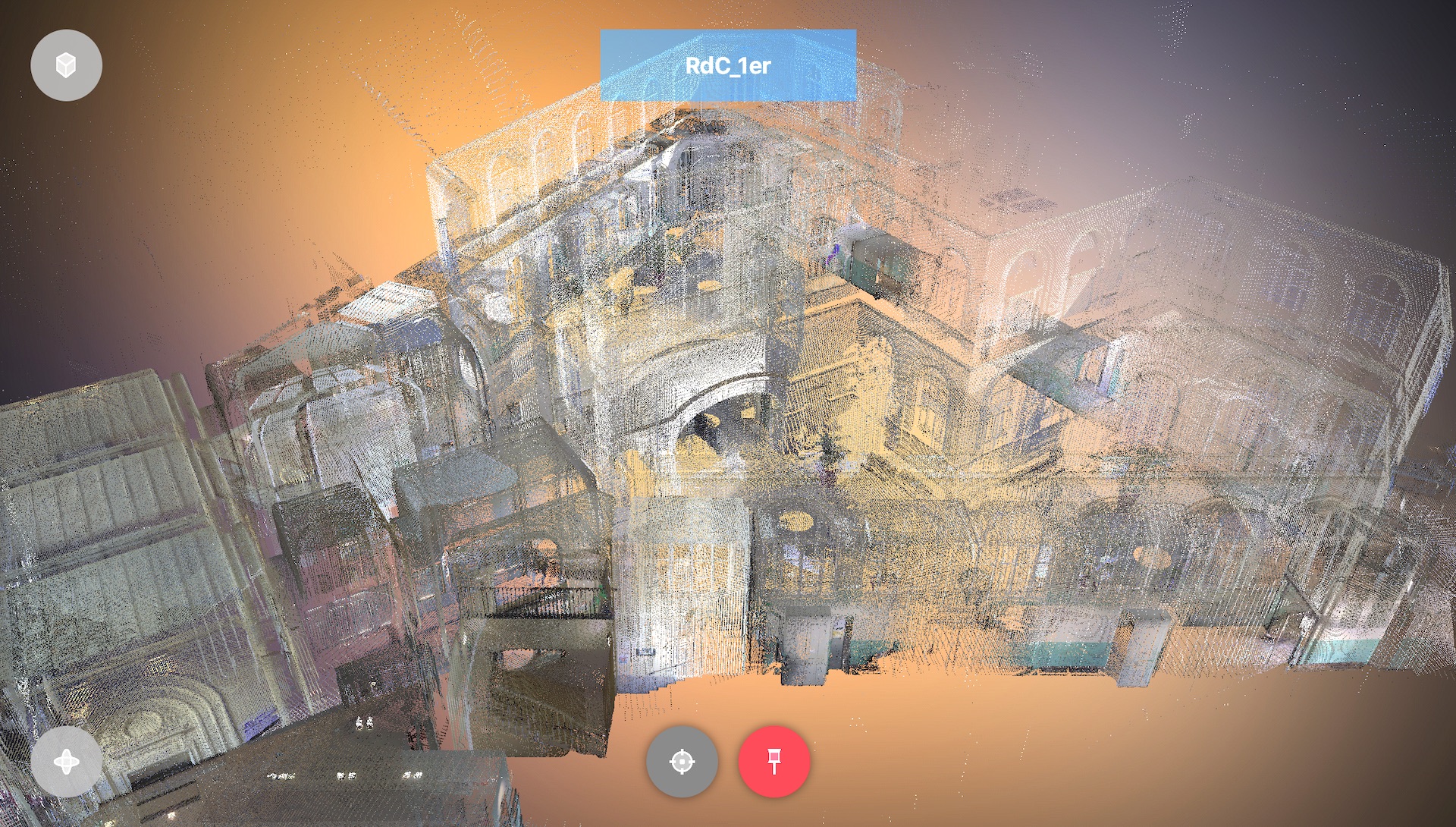

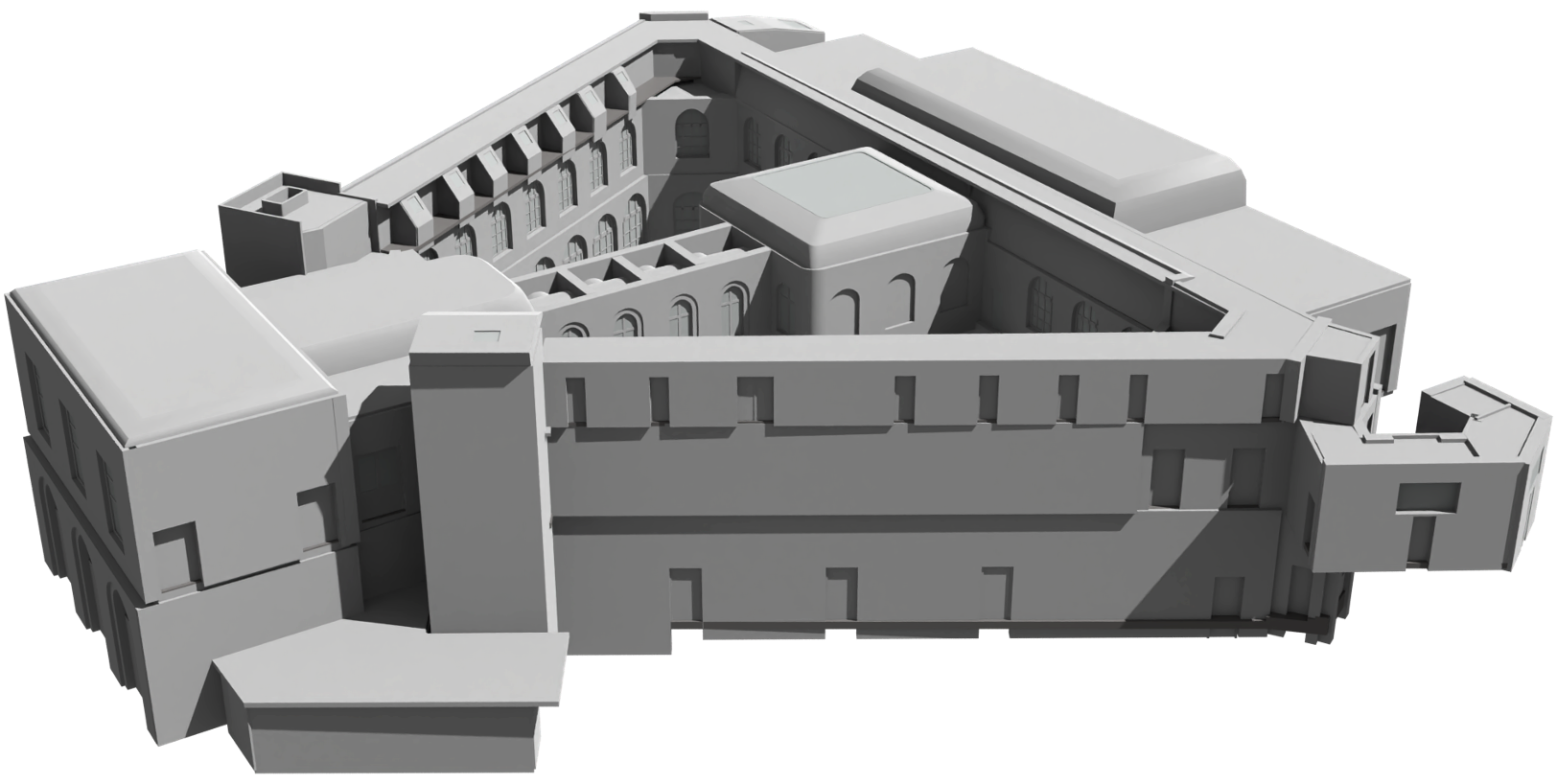

In addition to RIRs and ambience recordings, we used a LiDAR scanner to capture a point cloud of each building's interior.

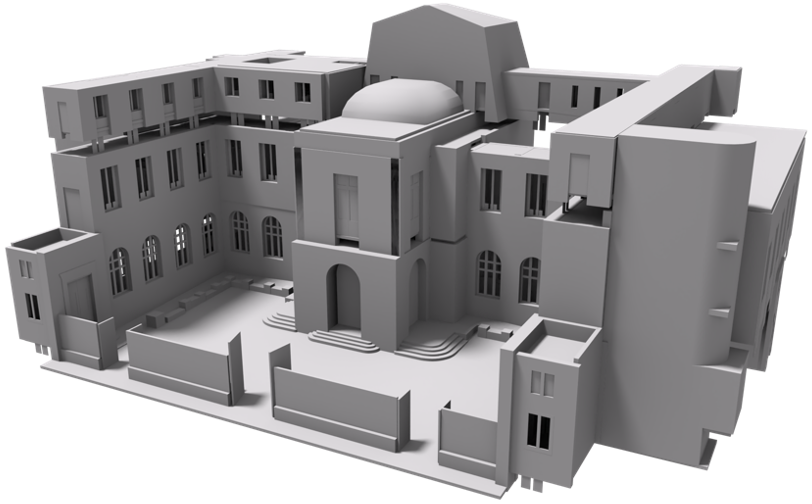

We then used these point clouds as a basis to create 3D acoustic models of the three buildings.

The plan was to use these models to generate auralisation scenarios with full control over source types and positions, which the ambience recordings did not allow.

Auralisations

We tested three workflows for creating auralisations: mixing ambisonic ambience recordings directly, convolving sources with measured RIRs, and convolving sources with simulated RIRs. Simulating the small test scenarios was relatively straightforward, but the large real-world buildings proved much more challenging. Pressed for time, we ended up using only the first two workflows for those.
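Both RIR-based workflows rest on the same operation: a dry source sound (a footstep, a cane tap) is convolved with the RIR measured or simulated for a given source and listener position. The sketch below is a minimal illustration of that step, not the project's pipeline. It assumes the signals are plain lists of float samples at a common sample rate, and `convolve` and `auralise_binaural` are hypothetical names:

```python
def convolve(signal, ir):
    """Direct-form linear convolution; output length is len(signal) + len(ir) - 1."""
    if not signal or not ir:
        return []
    out = [0.0] * (len(signal) + len(ir) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(ir):
            out[n + k] += x * h  # each input sample excites a scaled, shifted copy of the IR
    return out


def auralise_binaural(dry, rir_left, rir_right):
    """Render a mono dry source through a two-channel (left/right ear) binaural RIR."""
    return convolve(dry, rir_left), convolve(dry, rir_right)


# Usage: a unit impulse reproduces the RIR itself, which is a handy sanity check.
left, right = auralise_binaural([1.0], [0.3, 0.1], [0.2])
print(left, right)  # [0.3, 0.1] [0.2]
```

Direct convolution costs O(len(signal) * len(ir)) operations, which is slow for RIRs that last several seconds at audio sample rates. In practice FFT-based convolution (for example `scipy.signal.fftconvolve`) is the usual choice. For Ambisonic RIRs the same convolution would be applied per channel before decoding.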

Interface

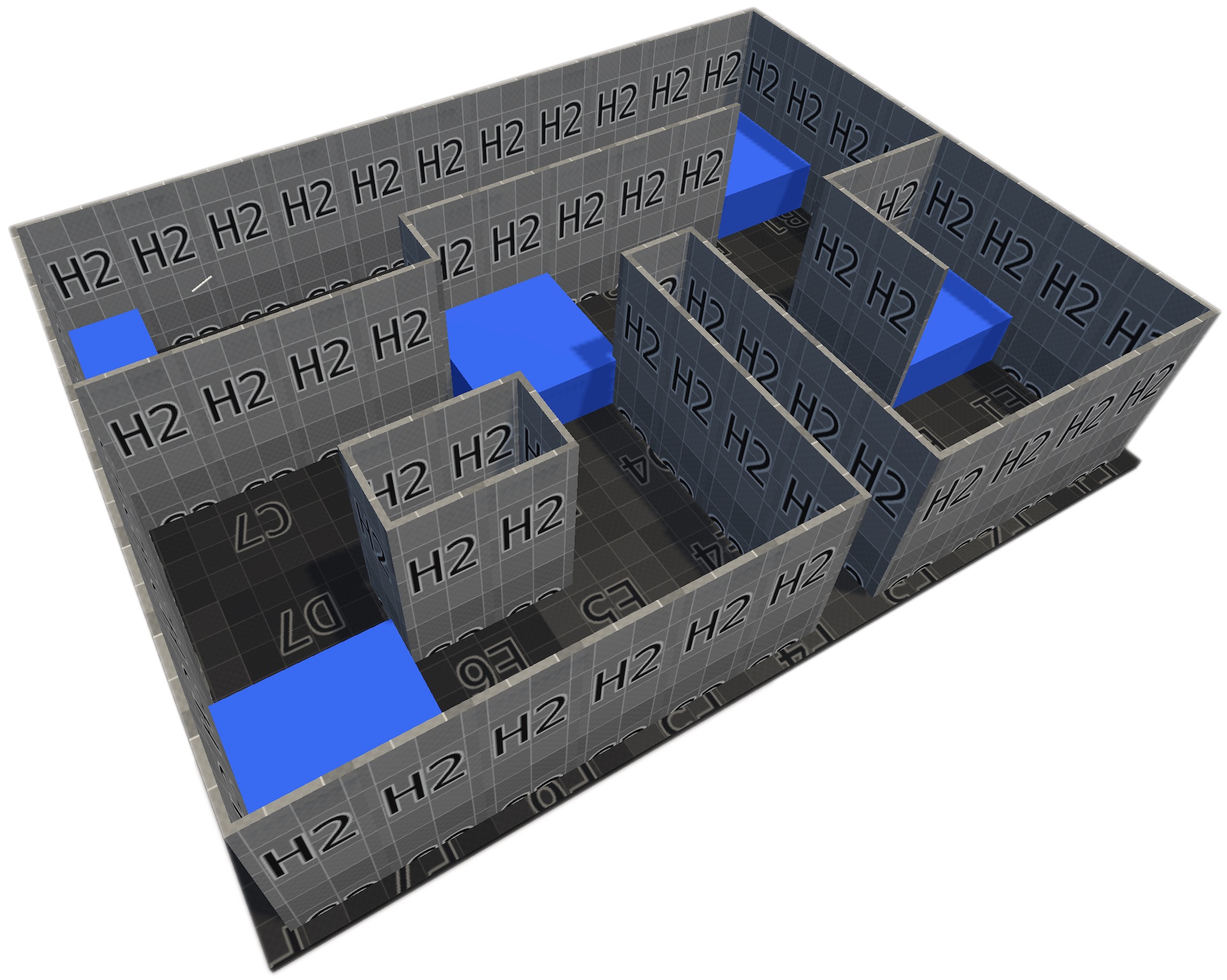

We still needed an intuitive interface for blind users to explore our scenarios and get the most out of the auralisations. We explored two main approaches: first-person exploration in VR and augmented tactile maps. To prototype the VR exploration, we built a few procedural mazes to test user locomotion and obstacle detection.

Example procedural maze with sound zones

While we did find some strategies that worked, it quickly became obvious that first-person exploration wasn’t ideal for the initial exploration phase. Just as sighted users often consult a street map before dropping into Street View to plan the last stretch of a route, blind users grasped a layout far more quickly from a high-level perspective, i.e. a tactile map.

Early VR interface exploration

Switching to tactile maps, we started with a 3D-printed map augmented with an OptiTrack system. The tracking setup sent the user’s finger positions to a Unity scene, which acted as a scene graph and triggered ambience playback in Max/MSP. It worked well technically, but it was bulky to move and required blind users to come to the lab for testing. The ambience triggering was also far from intuitive, as there was no tactile feedback to indicate which location was currently active.
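The core of the v1 logic is mapping a tracked finger position to a region of the map and reacting only when that region changes. The actual implementation lived in Unity and Max/MSP and is not described here. This Python sketch assumes axis-aligned rectangular zones in map coordinates, and its names (`Zone`, `ZoneTrigger`) are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical rectangular map region tied to one ambience."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class ZoneTrigger:
    """Track which zone the finger is in and report only transitions."""

    def __init__(self, zones):
        self.zones = list(zones)  # earlier zones win where zones overlap
        self.active = None        # None means the finger is outside every zone

    def update(self, x, y):
        """Feed one tracked finger position; return True if the active zone changed."""
        hit = next((z.name for z in self.zones if z.contains(x, y)), None)
        changed = hit != self.active
        self.active = hit
        return changed


# Usage: start or stop playback only on a change, not on every tracking frame.
trigger = ZoneTrigger([Zone("hall", 0, 0, 1, 1), Zone("corridor", 1.5, 0, 3, 0.5)])
for x, y in [(0.5, 0.5), (0.6, 0.4), (2.0, 0.2)]:
    if trigger.update(x, y):
        print("now playing:", trigger.active)
```

Triggering on transitions rather than on every frame avoids restarting the ambience at the tracker's frame rate. It does not solve the problem noted above, though: the software knows which zone is active, but nothing under the user's finger tells them.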

Augmented tactile map v1

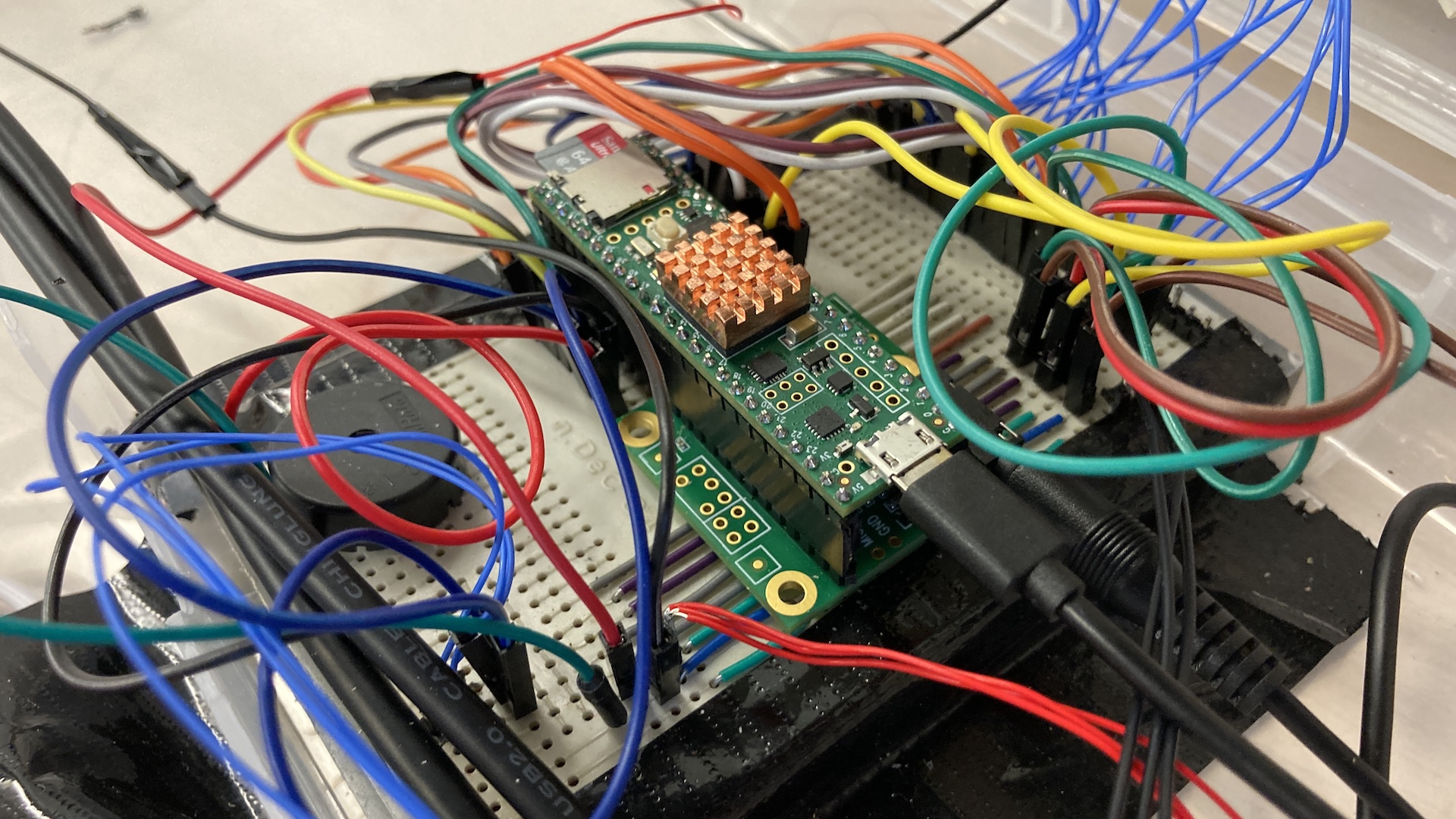

So we built a v2. Using the same 3D-printed map, a drill, a few buttons, and a Teensy, we designed a new version shaped like a suitcase: portable, and much more intuitive. Users can press buttons at various points to hear different ambient sounds while exploring the map.

Designing augmented tactile map v2

In collaboration with the Fédération des Aveugles de France, we ran a study with this prototype to better understand which types of feedback were most informative during exploration, comparing and combining ambient cues, localized cane taps, and verbal descriptions.