Oculus Project (2019 - WIP)

In collaboration with Facebook Reality Labs, the overall project investigates how far binaural audio rendering quality needs to be pushed in VR and AR to elicit life-like reactions from players across various types of games and applications.

Get everything up and ready

The first task was to prepare the room in which the experiments would take place. But first, it's Christmas time...

Oculus CV1 headset unboxing

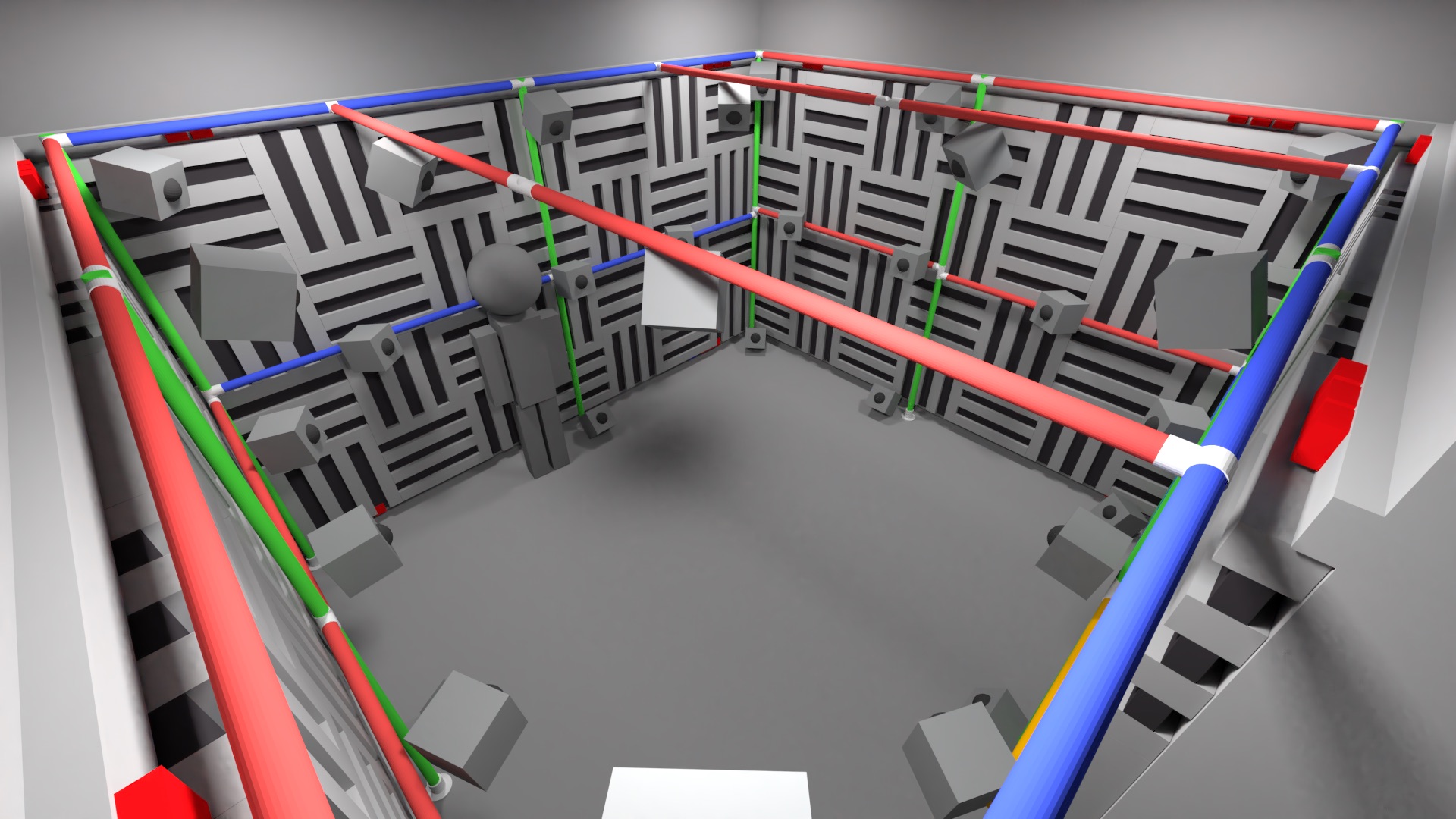

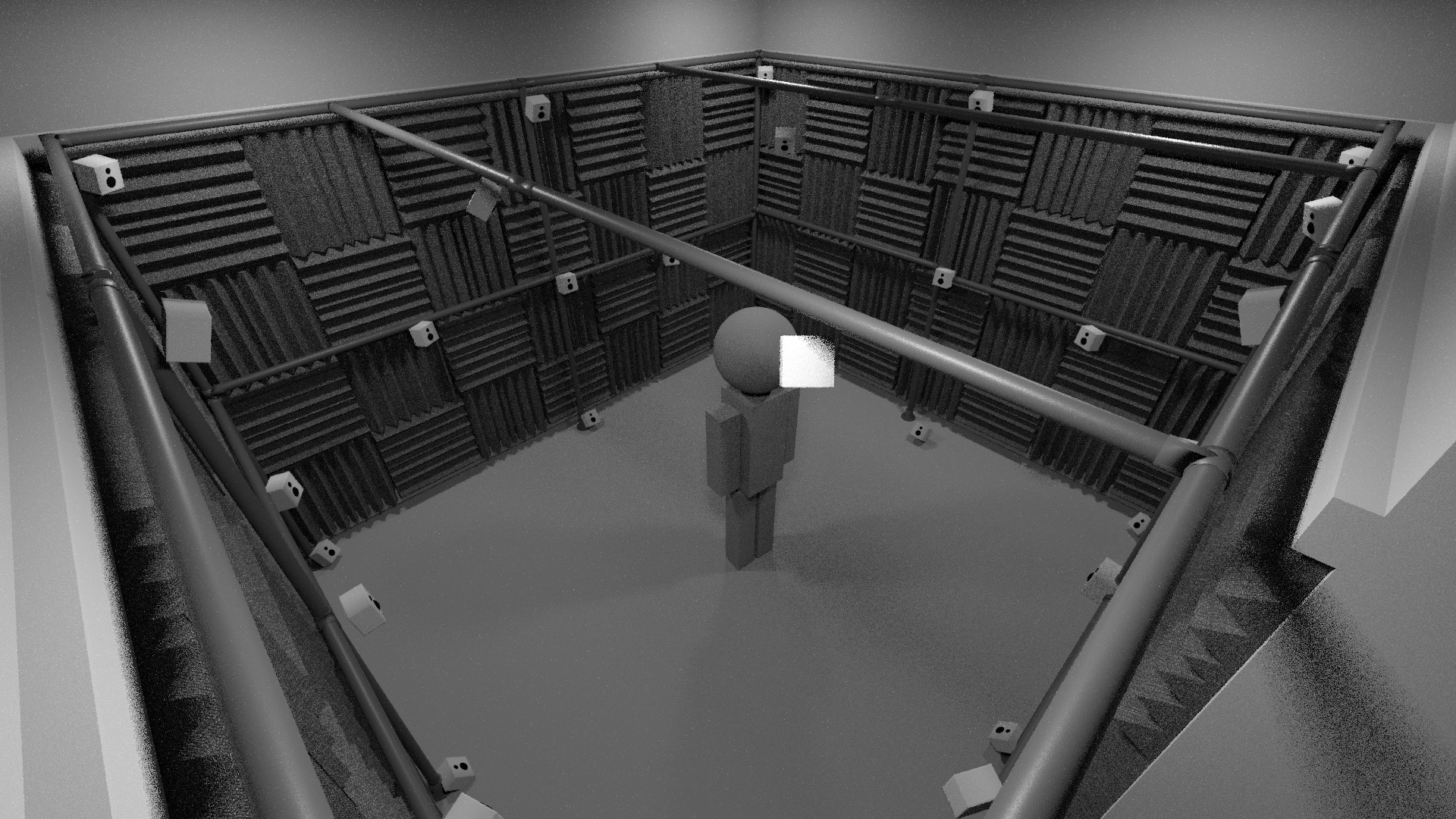

The room was designed to be acoustically dry enough to overlay any type of real or virtual acoustics, with a mounting structure to hold loudspeakers, tracking cameras, and other VR/AR-related equipment.

CAD of the VR/AR Room

MocapVR room completed

Are individualized HRTFs worth it in a gaming context?

The goal of this experiment was to assess the impact of individualized HRTFs in a VR game. HRTF individualization can roughly be seen as an adaptation of the audio rendering to a player's “listening profile”. Individual rendering brings, among other things, an improved capacity for audio source localization. The drawback is that establishing a player's profile is still fairly complicated at the moment. We wanted to check whether this individualization is worth it and, if so, for which level of gameplay, as there's no real need for fast and accurate localization when everything is moving so slowly that you can shoot at random and still get away with it.

In-game capture of the HRTF individualization experiment

How good can we get by training with a non-individualized HRTF?

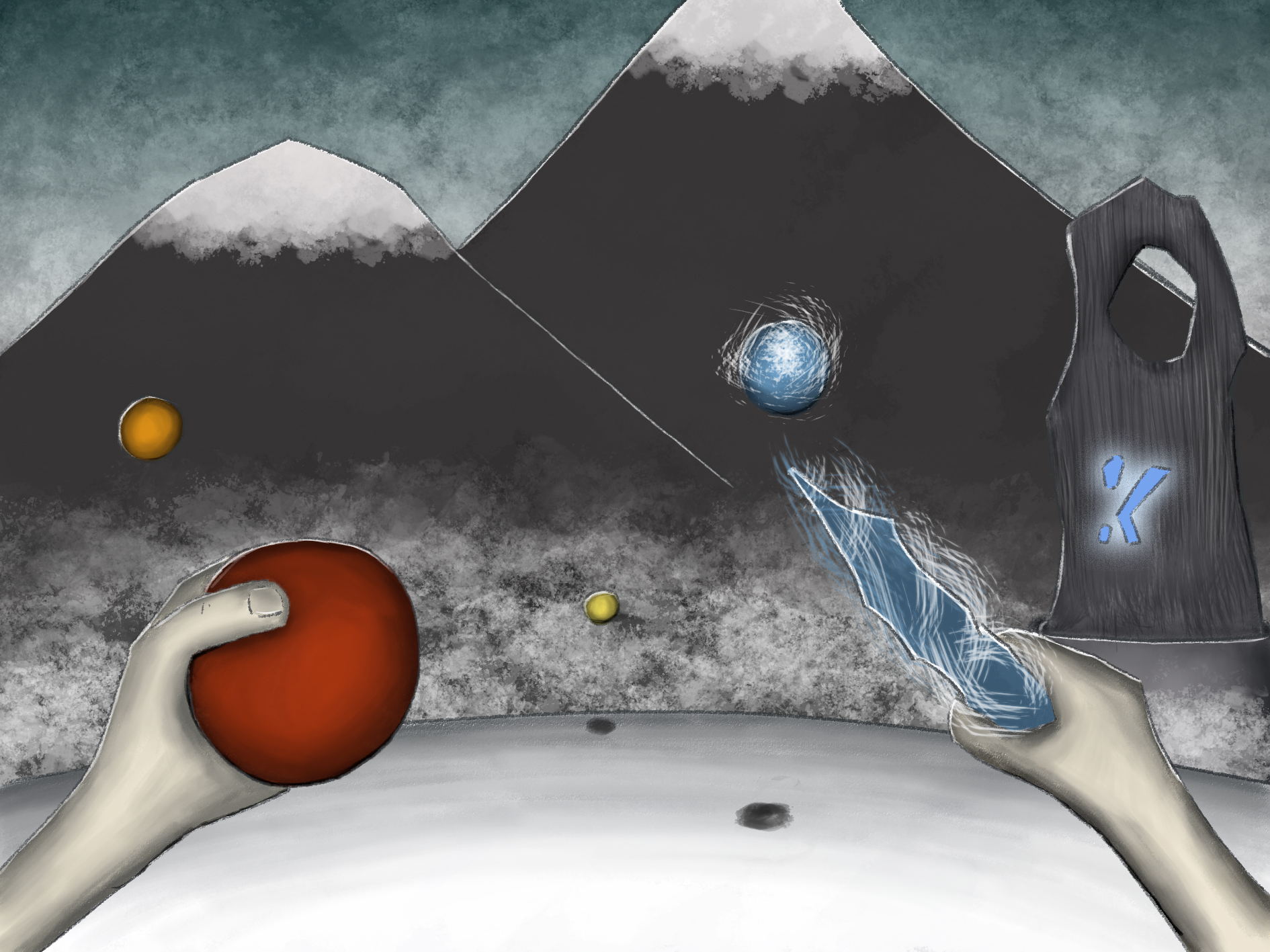

The goal of this experiment was to design a VR game to accelerate HRTF selection and learning. The first design proposed was based on a harvesting mechanism, coupled with throwing things here and there to score points. The harvesting forced players to pay attention to audio-visual targets of known position in space (hear it spawn, check where it is, continue playing while it ripens, harvest it before it goes bad). Throwing things, along with a few other gameplay mechanics that had players move audio sources around their heads, was supposed to further help with the learning (proprioception).

HRTF training experiment v1 storyboards

HRTF training experiment v1 in-game video

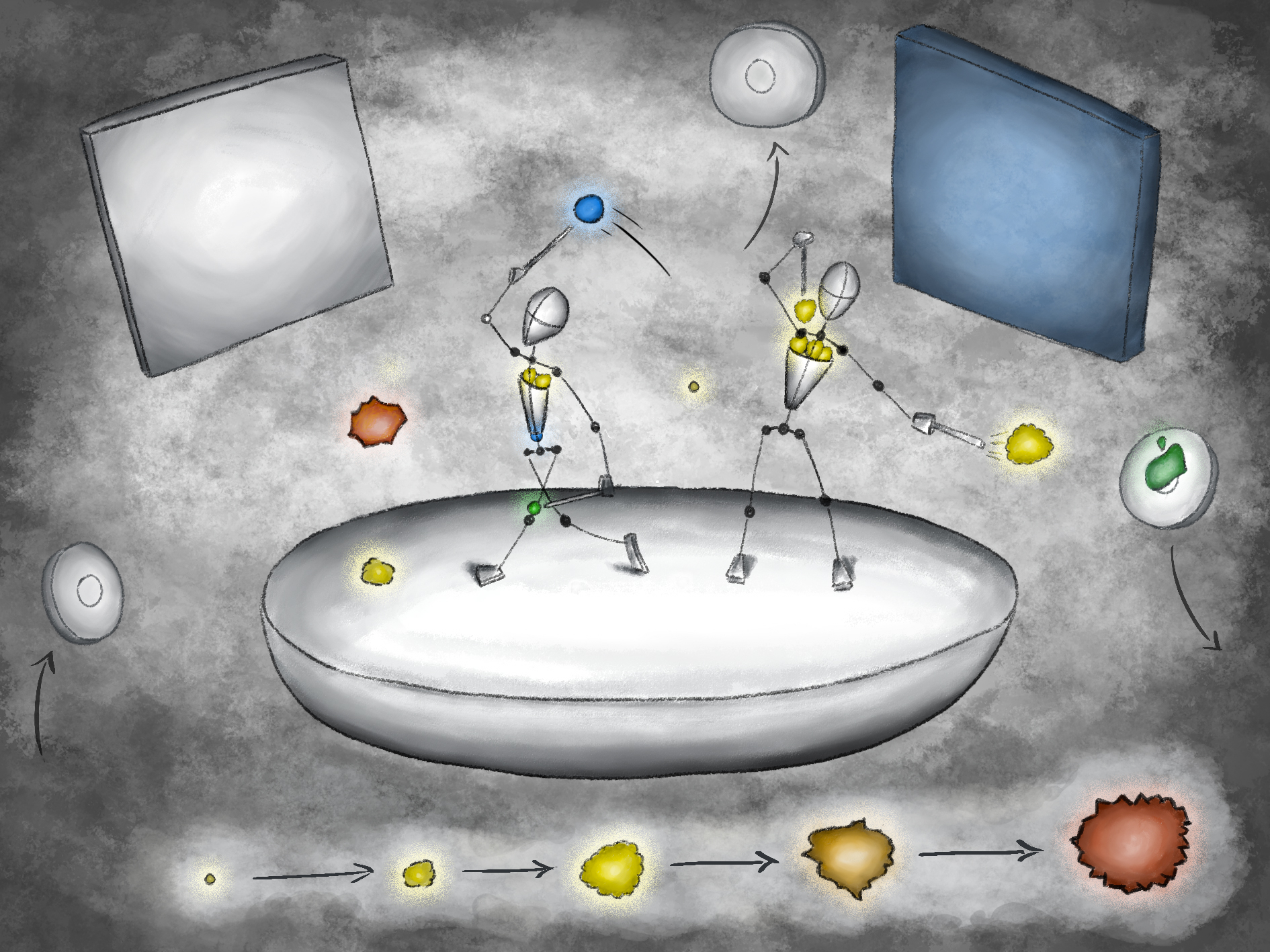

The first design was eventually discarded. Beta tests showed not only that participants had a hard time understanding the game mechanics, but also that playing it did not result in any substantial HRTF-related improvement (i.e. in audio localization accuracy). A second version was designed, switching gears: if the first implementation was a game turned into a learning experience, the second was a learning experience made into a game. We listed all the known localization problems of non-individualized binaural rendering, and created learning scenarios to expose and overcome them one by one. This new design capitalizes on skill learning itself as an incentive, presenting participants with a tool that we ourselves would want to use to quickly learn a new set of ears.
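As an illustration of what "audio localization accuracy" can mean in practice, here is a minimal sketch that scores a set of trials by the great-circle angle between where a source was and where the participant pointed. This is one common way to quantify localization error, not necessarily the metric used in these experiments; the function names and the azimuth/elevation convention (degrees, elevation measured up from the horizontal plane) are assumptions made for the example.

```python
import math

def direction(azimuth_deg, elevation_deg):
    """Unit vector for an (azimuth, elevation) pair in degrees (assumed convention)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

def angular_error_deg(target, response):
    """Great-circle angle, in degrees, between two (azimuth, elevation) pairs."""
    t = direction(*target)
    r = direction(*response)
    dot = sum(a * b for a, b in zip(t, r))
    # Clamp to guard against rounding pushing the dot product outside [-1, 1].
    dot = max(-1.0, min(1.0, dot))
    return math.degrees(math.acos(dot))

def mean_angular_error_deg(trials):
    """Average great-circle error over a list of (target, response) pairs."""
    errors = [angular_error_deg(target, response) for target, response in trials]
    return sum(errors) / len(errors)
```

Comparing this mean error before and after a training session would give one rough indication of whether localization improved.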

HRTF training experiment v2 in-game video

Keynote of the HRTF training experiment (GameSound conference 2020)

Oh, and by the way, it's Christmas again.

Oculus Quest 1 and 2 headsets unboxing

Towards augmented reality

While investigating spatial sound perception in AR, we started using more and more scenes composed of both virtual and real sound sources. As such, we needed to assess whether wearing an HMD impacted participants' ability to localize real sound sources.

Participant during the experiment on the impact of HMDs on audio localization