Cloud Theatre (2018)

This project is in the same vein as the Ghost Orchestra: recreating the acoustics of an existing architectural design and experiencing it in VR. I had roughly a month to work on this one. Let's start with week 0 (the timing is not really accurate; indulge me, I wanted to do it blog-like for once).

Project home page and BNF virtual exposition

Week 0: Project Description

Cloud Theatre takes place in a virtual reproduction of the Athénée Theatre (Paris). Two actors are to be filmed and audio-recorded, then placed in the 3D model both as visual avatars and as audio sources. On top of it all, the acoustics and visuals of the theatre are to be reproduced for three different periods (today, back in the 90s before the last renovation, and in the early days of the theatre). We start by getting our hands on a rough model of the theatre, the very same we used in a previous BGE-based point-cloud project.

Previous use-case of the Athénée model: BGE-based point-cloud rendering

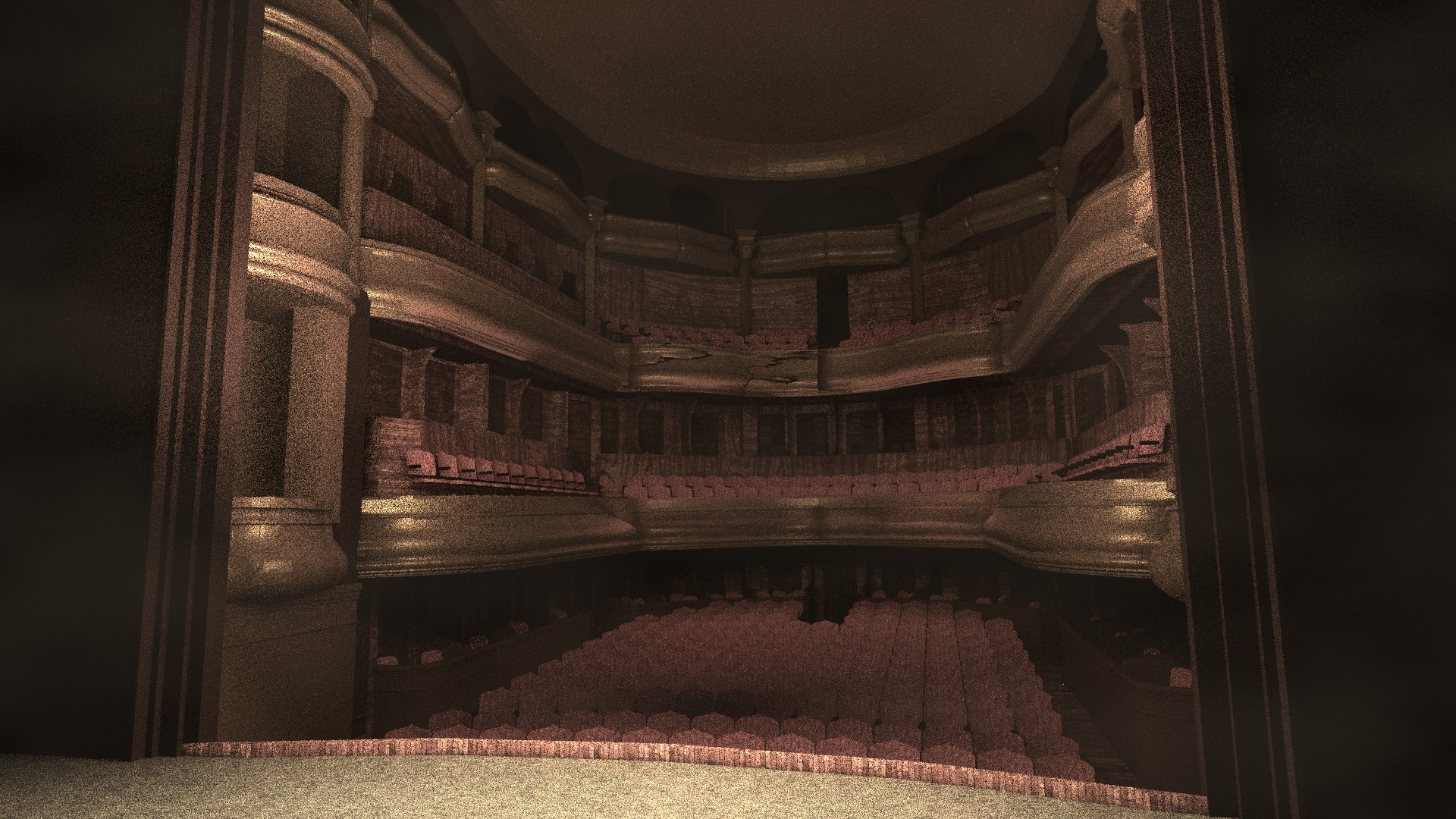

Bart starts going through the pain of checking the archives and creating a Geometrical Acoustics model (a 3D mesh with acoustic material information) to simulate the auralizations for the three periods (changing seats here, modifying the orchestra pit there...). Thank you Bart :). On my side, I start texturing the model and try my hand at Blender Cycles. First impression? Still work to do...

First Cycles render attempt

Week 1: Getting my bearings in Cycles

I won't comment much on the hassle this has been, but a hassle it was. Texturing, modeling, re-modeling because the topology was just not adapted. Needless to say, I spent an awful lot of time listening to Price's tutorials :). Thank you Andrew.

Getting to know Cycles

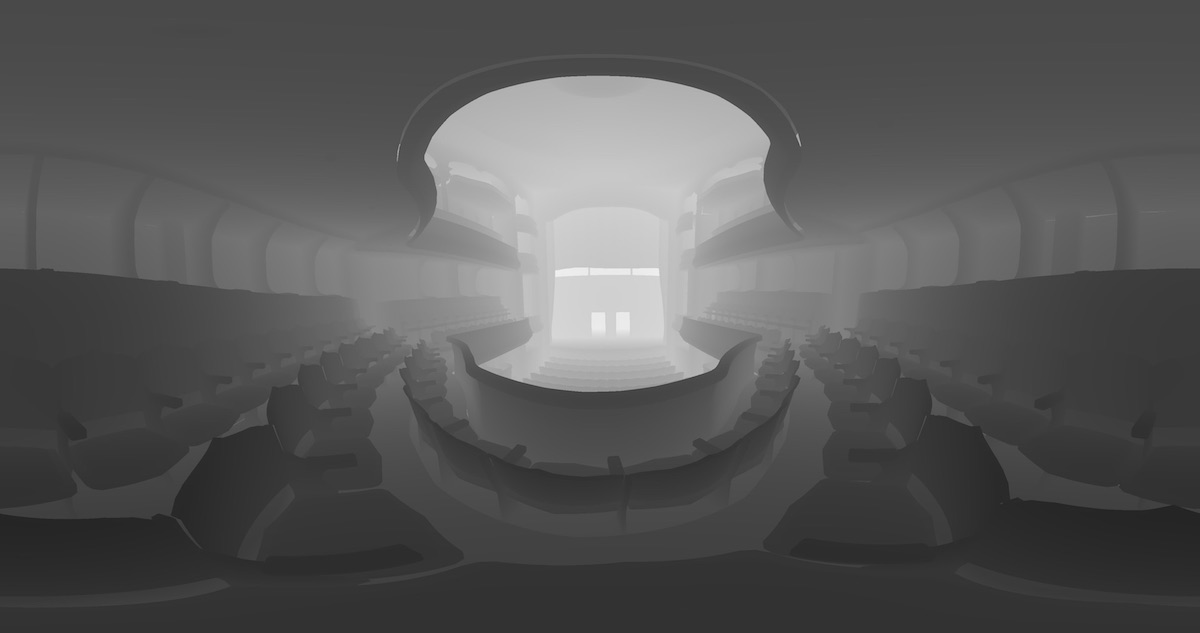

Didn't I tell you? Brian, the project director, also wanted a 360° rendering of the whole thing, for “you need only to tweak that camera thing, no? Cool, do it.” (love you Brian).

360° (equirectangular) rendering depth map

Why is this last image in B&W? That's for week 2.
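For the curious, "that camera thing" boils down to an equirectangular projection: every view direction around the camera is mapped to a pixel through its longitude and latitude. Here is a minimal sketch of that mapping. The axis convention (Z up, +X at the image centre) and the function name are assumptions made for illustration, not Blender's internals.

```python
import math

def direction_to_equirect(x, y, z, width, height):
    """Map a 3D view direction to (u, v) pixel coordinates in an
    equirectangular image of size width x height.

    Assumed convention: Z is up, +X lands at the image centre,
    longitude grows towards +Y, and v = 0 is the top row.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(y, x)   # longitude in [-pi, pi]
    lat = math.asin(z / r)   # latitude in [-pi/2, pi/2]
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v

# Looking straight along +X ends up in the middle of the image.
centre = direction_to_equirect(1.0, 0.0, 0.0, 2048, 1024)
# Looking straight up ends up on the top row.
zenith = direction_to_equirect(0.0, 0.0, 1.0, 2048, 1024)
```

The 2:1 aspect ratio of such images follows directly: longitude spans 360° while latitude spans only 180°.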

Week 2: CrowdMaster and compositing

Brian figured that at some point we would have actors, a theatre... and a lot of empty seats. That would not do at all. The problem was, animating one dude is fun, 150 of them less so. We needed to go procedural on that one, and that's where CrowdMaster came in. The whole thing is still under development, but it has clearly reached a stage where one can rely on it for audience animation. We chose a decent character (no time to design a proper set at this point), and off we went trying different types of animation and NLA distributions...

and we're good to go.

Spectators in the theatre

Week 3: Getting the cloud in the theatre

That one was quite a pain to get right (did we ever, though?). We wanted to use the actors' video recordings directly in the model, rather than relying on rigs and animation to recreate the play. The look and feel of the Engineers in Prometheus was pretty much the target there. Based on the Kinect2 camera recordings (using libfreenect2), the plan was to use the depth video as a depth map for the point cloud, and fill in the texture with the RGB video. After a series of trials and errors, we finally got the look we were after.

To achieve the final point-cloud look, I used displacement on a subdivided plane (the screen), parent of a sphere/plane (the pixel), dupliverted with the RGB texture applied based on object space. Finally, I used a mesh deform modifier to apply the pyramid-like projection cone, making sure the actors' size remains constant when they move between foreground and background. I pushed a GitHub repo with a basic Cycles point-cloud project for reference.
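Why the projection cone matters can be seen with a bit of pinhole-camera arithmetic. This is a minimal sketch of the underlying geometry, not the Blender setup itself. The intrinsics below (`FX`, `FY`, `CX`, `CY`) are illustrative placeholders, and real values would have to come from the camera calibration. An actor twice as far away covers half as many pixels, so displacing a flat plane along depth alone would shrink them. Scaling the lateral offset by depth restores their metric size.

```python
import math

# Illustrative intrinsics only (assumed values, not from the project).
FX, FY = 365.0, 365.0   # focal lengths, in pixels
CX, CY = 256.0, 212.0   # principal point, in pixels

def backproject(u, v, depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Pinhole back-projection: pixel (u, v) with a depth in metres
    becomes a camera-space point (x, y, z) in metres."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m

def apparent_height_px(height_m, depth_m, fy=FY):
    """Number of pixel rows covered by an object of a given height."""
    return height_m * fy / depth_m

def recovered_height_m(height_px, depth_m):
    """Metric height obtained by back-projecting the top and bottom
    pixel rows of an object centred on the principal point."""
    _, y_top, _ = backproject(CX, CY - height_px / 2.0, depth_m)
    _, y_bottom, _ = backproject(CX, CY + height_px / 2.0, depth_m)
    return y_bottom - y_top

# The same 1.8 m actor, at 2 m and then at 4 m from the camera.
near_px = apparent_height_px(1.8, 2.0)
far_px = apparent_height_px(1.8, 4.0)
near_m = recovered_height_m(near_px, 2.0)
far_m = recovered_height_m(far_px, 4.0)
```

In the image the far actor is half as tall (`far_px` is half of `near_px`), but both recovered heights come back to 1.8 m once each pixel is pushed out along its own ray of the cone.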

Cycles point-cloud evolution

Week 4: Final rendering and integration of the auralization

Audio, visuals, animation, spatialized audience noise... putting everything together and the play goes live. The following video is still a work in progress, but gives a rather good idea of what the final rendering should look like.

Visual rendering, mid row

First release of the Cloud Theatre

And the equirectangular / 360° version with 2nd order Ambisonic to binaural rendering for 2 different positions in the theatre:

360° (equirectangular) rendering: last row, high lights
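A quick note on what "2nd order" means in practice: an Ambisonic stream of order N carries (N + 1)² channels, so 9 here, each weighting a source by a spherical harmonic of its direction. The sketch below computes those weights for a single direction. The ACN channel ordering and SN3D normalisation are assumptions (the convention used for the actual rendering is not stated here), and the binaural decoding stage is not covered.

```python
import math

def ambisonic_channel_count(order):
    """Number of channels of a full-sphere Ambisonic stream."""
    return (order + 1) ** 2

def encode_gains_order2(azimuth, elevation):
    """Encoding gains of a plane-wave source up to 2nd order.

    Assumed convention: ACN channel order, SN3D normalisation,
    angles in radians, azimuth counter-clockwise from the front.
    """
    ca, sa = math.cos(azimuth), math.sin(azimuth)
    ce, se = math.cos(elevation), math.sin(elevation)
    k = math.sqrt(3.0) / 2.0
    return [
        1.0,                                   # ACN 0 (W)
        sa * ce,                               # ACN 1 (Y)
        se,                                    # ACN 2 (Z)
        ca * ce,                               # ACN 3 (X)
        k * math.sin(2.0 * azimuth) * ce * ce, # ACN 4 (V)
        k * sa * math.sin(2.0 * elevation),    # ACN 5 (T)
        0.5 * (3.0 * se * se - 1.0),           # ACN 6 (R)
        k * ca * math.sin(2.0 * elevation),    # ACN 7 (S)
        k * math.cos(2.0 * azimuth) * ce * ce, # ACN 8 (U)
    ]

# A source straight ahead of the listener, at ear height.
front = encode_gains_order2(0.0, 0.0)
```

Multiplying a mono signal by each of these gains gives the 9-channel encoded stream for that source direction.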