Louvre (2023)

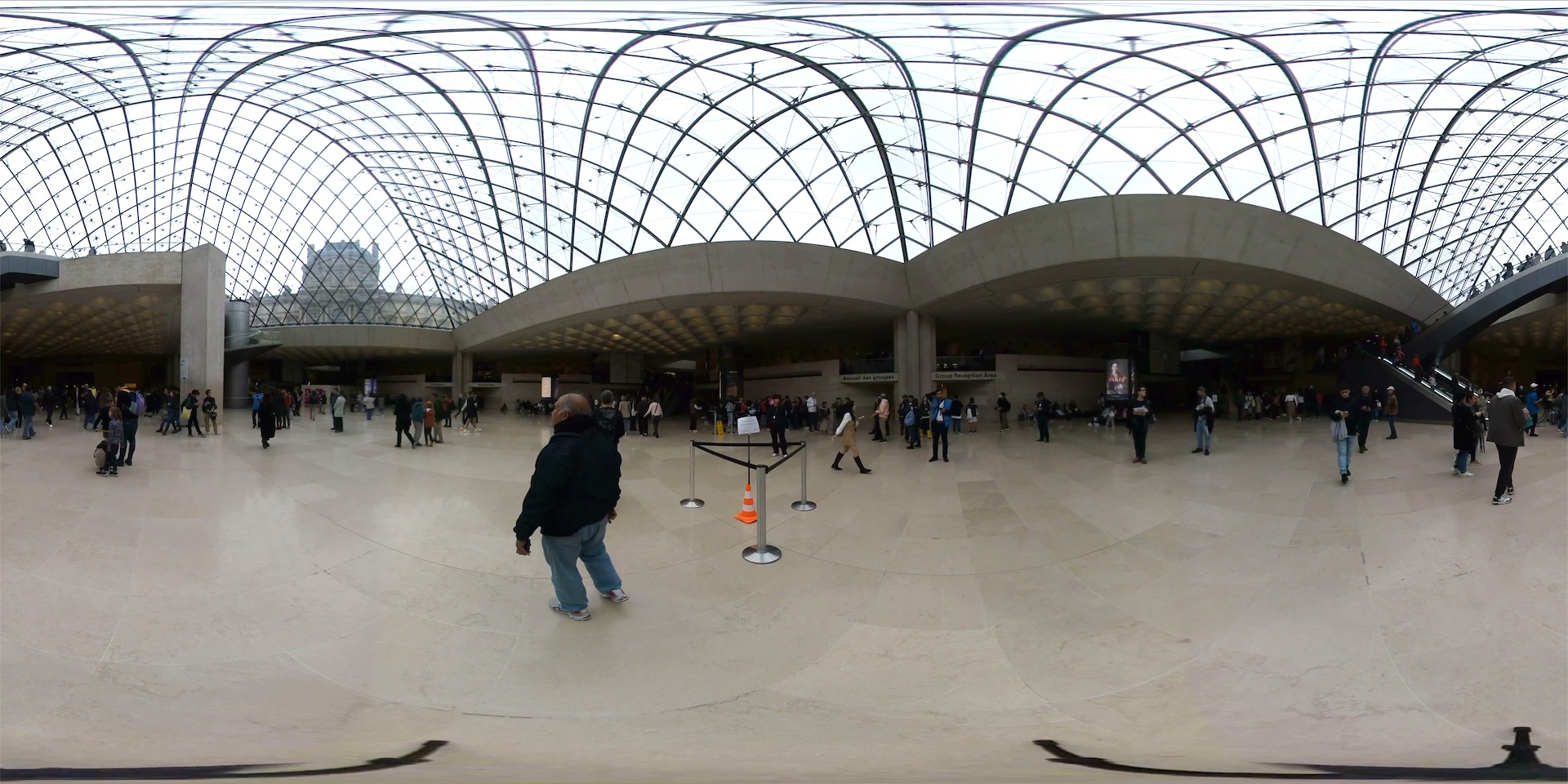

At the core of the Louvre project was an immersive auralisation of the Napoleon Hall, the large underground reception area beneath the Louvre pyramid. The project combined field recordings, virtual modelling, and spatial audio to support acoustic design decisions in a sensitive heritage environment.

Setting the scene

Napoleon Hall, though visually striking, suffers acoustically when crowded. Visitor noise often reaches disruptive levels, making the space challenging for both staff and guests. Our goal was to propose and evaluate acoustic treatments that would improve the experience without compromising the hall’s architectural integrity, a delicate balance given the space’s iconic status.

On-site measurements

We started with an extensive field campaign. During opening hours, we recorded the typical ambience with ambisonic microphones at four key positions covering the entrances, the centre of the hall, and the area near the reception desk.

Ambience recording session

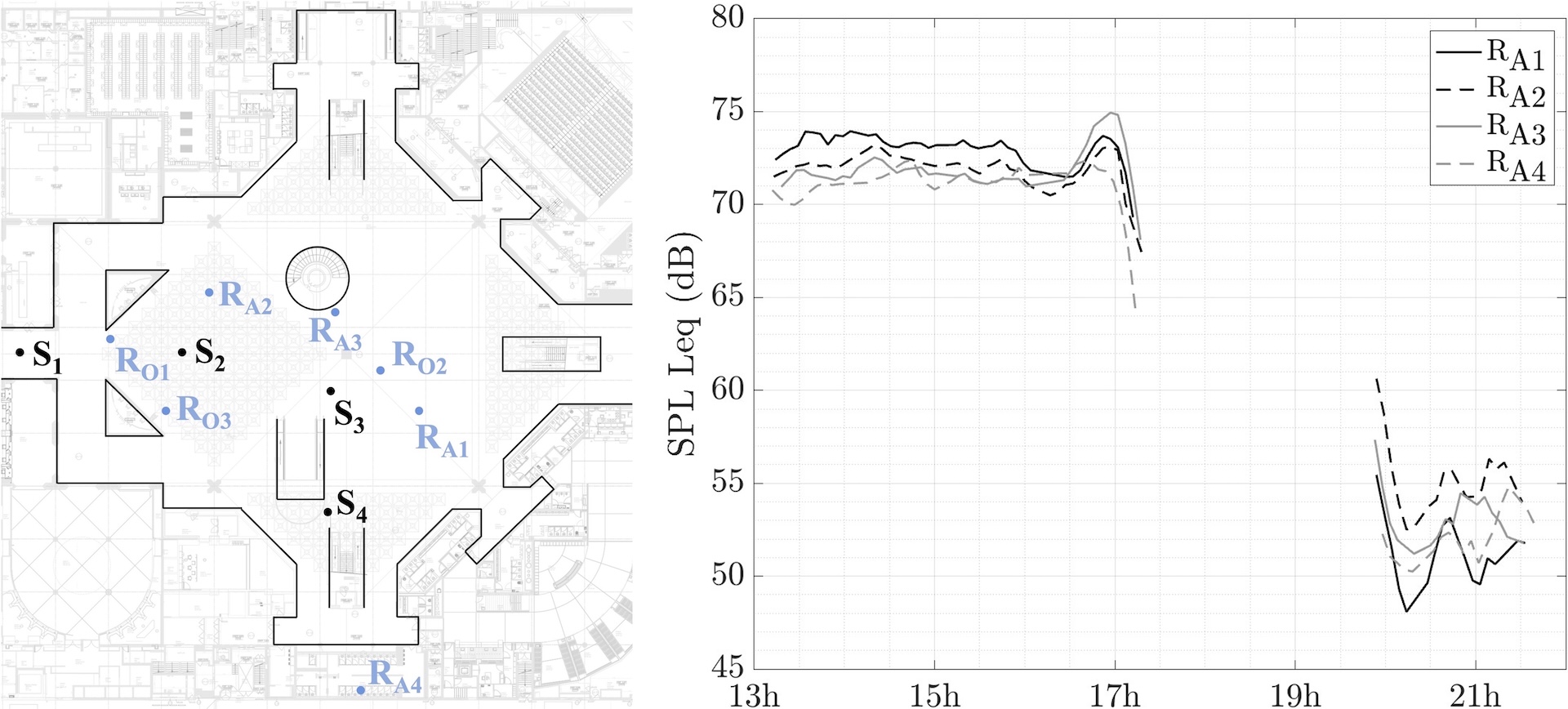

In the figure below, RAi mark the first-order ambisonic microphones used for the ambience recordings; ROi and Si mark the omnidirectional microphone and source positions used during the RIR recording session.

Ambience and RIR recording sessions + SPL in the Hall during the day

At night, with the escalators and ventilation system shut down, we captured impulse responses using a sine sweep method, along with background noise levels. This dataset would serve as the ground truth for calibration.
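For readers unfamiliar with the sine sweep method, the sketch below shows one common variant: an exponential sweep is played in the room, and the recording is convolved with a time-reversed, amplitude-compensated copy of the sweep to recover the impulse response. This is an illustration only, not the measurement chain used on site; the function names, sweep range, and duration are all assumptions.

```python
import numpy as np

def exp_sweep(f1, f2, duration, fs):
    """Exponential sine sweep from f1 to f2 Hz lasting `duration` seconds."""
    t = np.arange(int(duration * fs)) / fs
    rate = np.log(f2 / f1)
    phase = 2 * np.pi * f1 * duration / rate * (np.exp(t * rate / duration) - 1.0)
    return np.sin(phase)

def inverse_filter(sweep, f1, f2):
    """Time-reversed sweep with a decaying envelope.

    The envelope weights each instant in proportion to its frequency,
    which compensates the sweep's 1/f energy distribution.
    """
    n = len(sweep)
    envelope = np.exp(-np.arange(n) / n * np.log(f2 / f1))
    return sweep[::-1] * envelope

def deconvolve(recording, inv):
    """Linear convolution via FFT.

    The recovered impulse response starts near index len(inv) - 1.
    """
    n = len(recording) + len(inv) - 1
    nfft = 1 << (n - 1).bit_length()
    spectrum = np.fft.rfft(recording, nfft) * np.fft.rfft(inv, nfft)
    return np.fft.irfft(spectrum, nfft)[:n]

# Illustrative values only (not the settings used in the hall).
fs = 16000
sweep = exp_sweep(50.0, 7000.0, 1.0, fs)
inv = inverse_filter(sweep, 50.0, 7000.0)
```

In practice the recording would be the microphone signal captured while the sweep plays through the loudspeaker; the output is unnormalised, so it is usually scaled and trimmed from the direct-sound peak onwards.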

RIR measurement session

Building the acoustic model

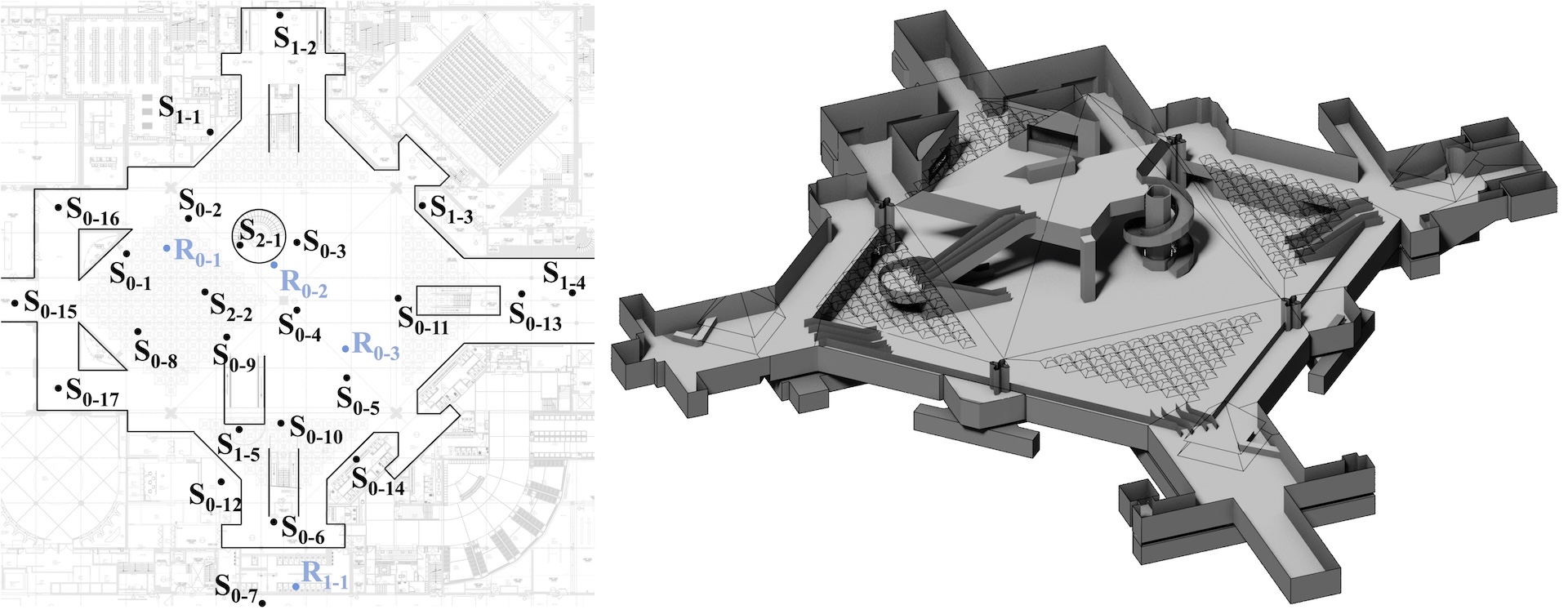

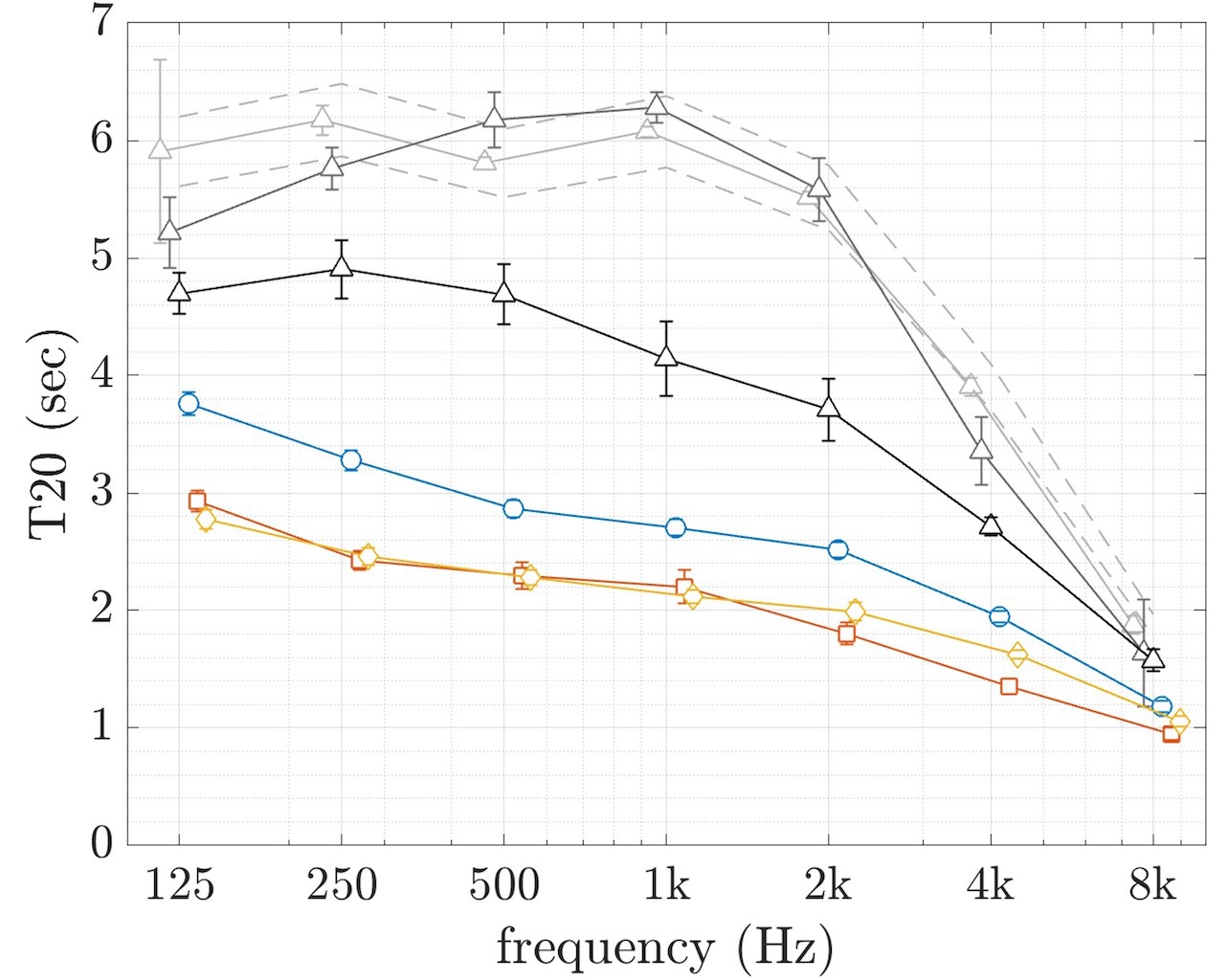

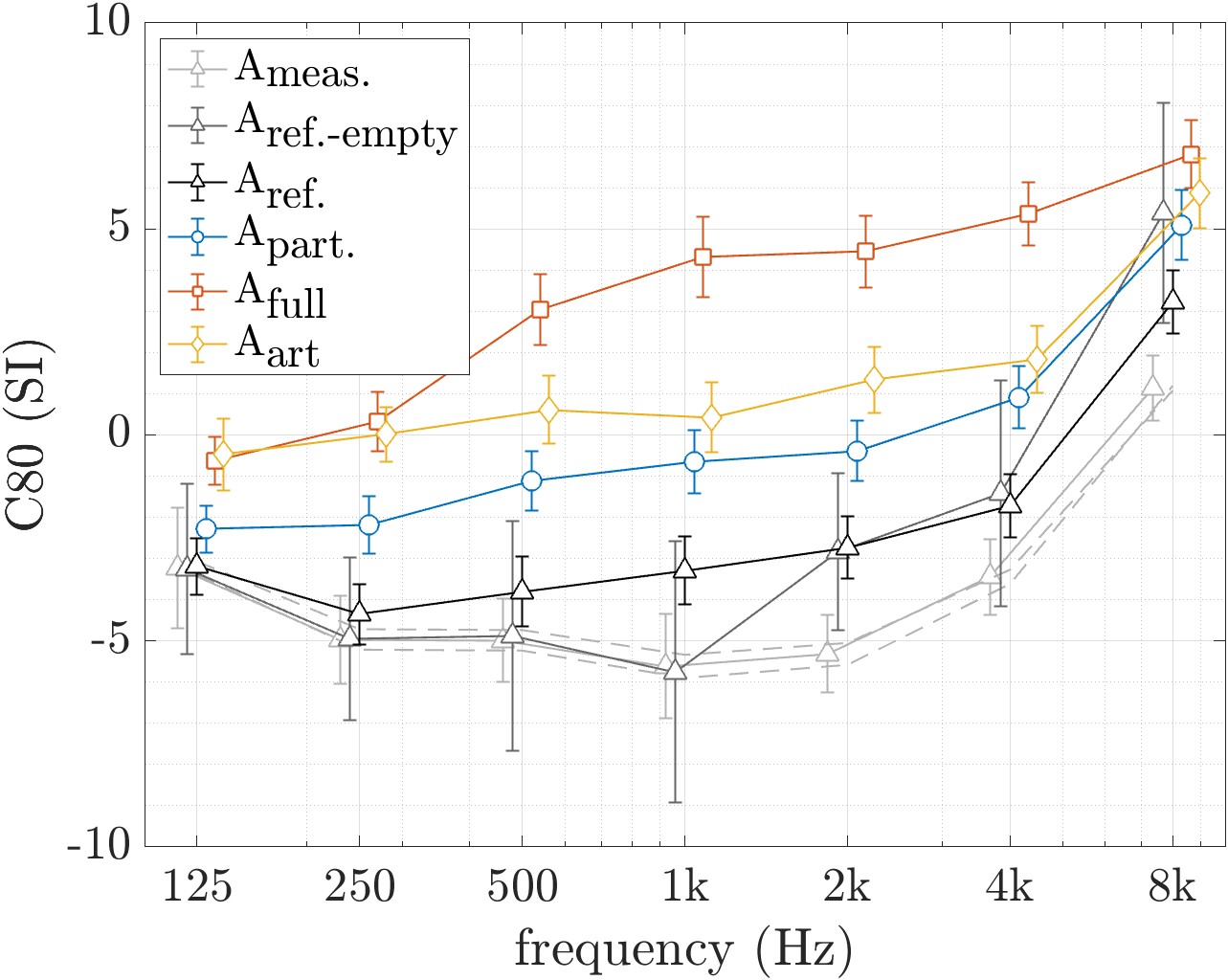

Back in the lab, we built a 3D model of the hall in Blender, simplifying the architectural drawings into a low-poly mesh compatible with CATT-Acoustic. Each surface was assigned material properties derived from field observations. We then calibrated the model, iterating on absorption and diffusion values until the simulated T20 and C80 metrics fell within perceptual thresholds of the measured values.
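To make the calibration criterion concrete, here is a minimal sketch of how T20 and C80 are commonly derived from an impulse response, using Schroeder backward integration. It assumes a broadband RIR that starts at the direct sound and has a low noise floor; in practice the metrics are computed per octave band. The function names are hypothetical, and the thresholds in `within_jnd` (5 % for reverberation time, 1 dB for clarity) are assumed just-noticeable differences, not values taken from the project.

```python
import numpy as np

def schroeder_db(rir):
    """Backward-integrated energy decay curve, in dB re. total energy."""
    energy = np.cumsum(rir[::-1] ** 2)[::-1]
    return 10.0 * np.log10(energy / energy[0])

def t20(rir, fs):
    """Reverberation time from a line fit between -5 and -25 dB,
    extrapolated to a 60 dB decay."""
    edc = schroeder_db(rir)
    idx = np.where((edc <= -5.0) & (edc >= -25.0))[0]
    slope, _ = np.polyfit(idx / fs, edc[idx], 1)  # dB per second (negative)
    return -60.0 / slope

def c80(rir, fs):
    """Clarity: ratio of early (0-80 ms) to late energy, in dB."""
    k = int(round(0.080 * fs))
    e = rir ** 2
    return 10.0 * np.log10(e[:k].sum() / e[k:].sum())

def within_jnd(sim_t20, meas_t20, sim_c80, meas_c80,
               t_jnd=0.05, c_jnd_db=1.0):
    """True if simulation and measurement differ by less than the
    assumed perceptual thresholds (relative for T20, absolute for C80)."""
    t_ok = abs(sim_t20 - meas_t20) / meas_t20 <= t_jnd
    c_ok = abs(sim_c80 - meas_c80) <= c_jnd_db
    return bool(t_ok and c_ok)
```

A calibration loop would then adjust material coefficients in the simulation, re-export the RIRs, and repeat until `within_jnd` holds at each measured position and frequency band.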

Acoustic model and simulated source/receiver positions

Treatment scenarios

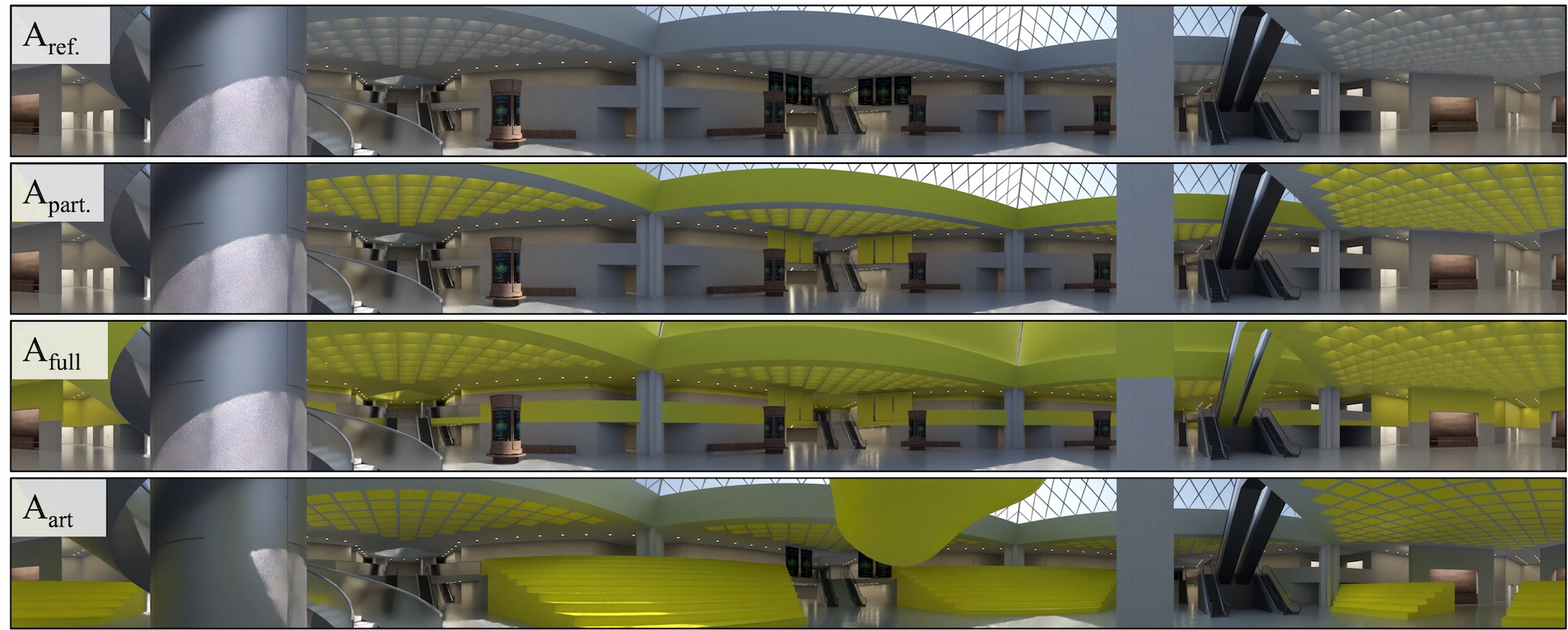

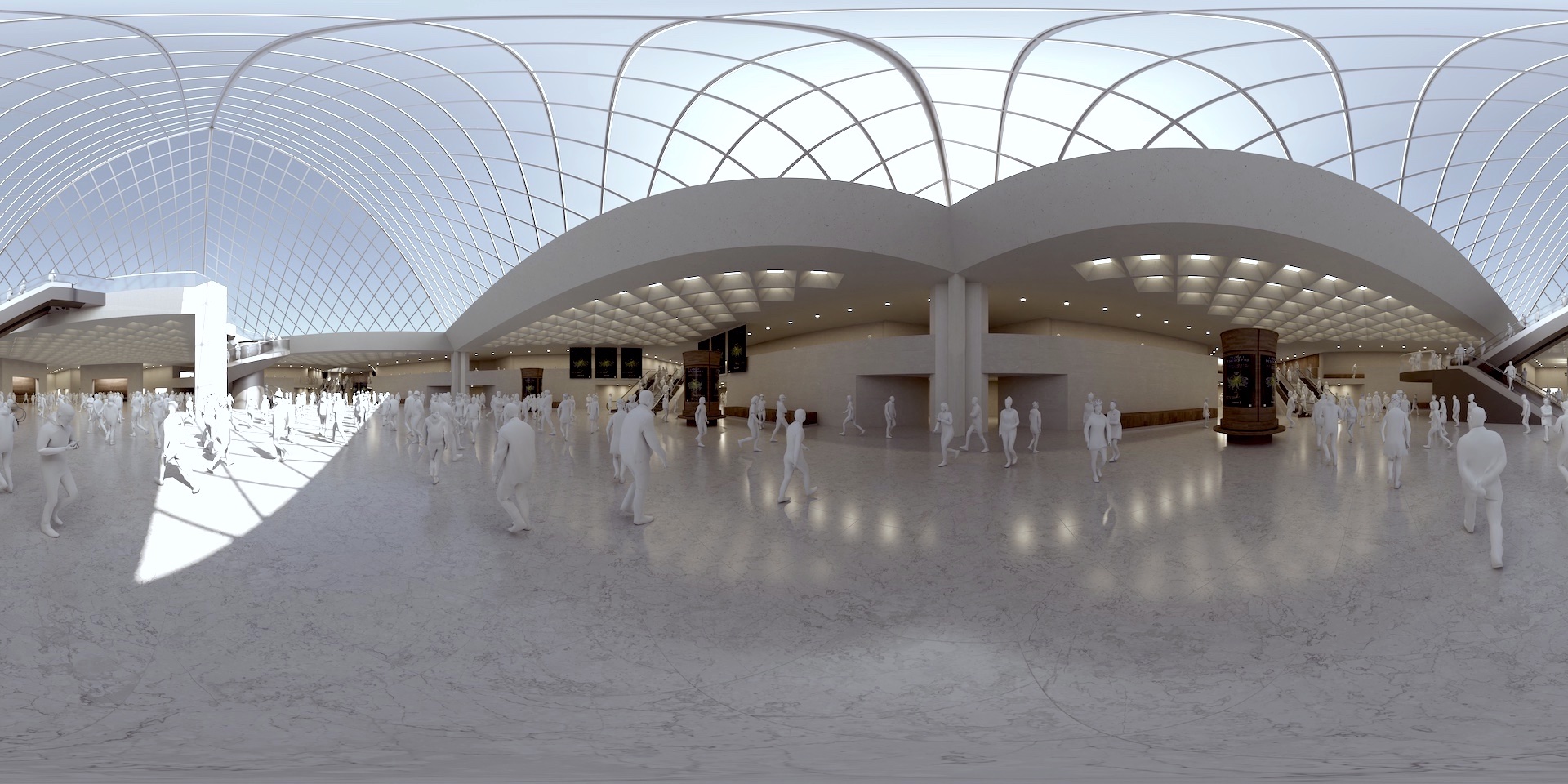

We evaluated three main treatment strategies, plus the untreated baseline. These were visualised in the 3D model using yellow highlights to denote modified surfaces.

Visual rendering of acoustic treatments

- Aref.: the current untreated state.

- Apart.: partial coverage with acoustical plaster on non-accessible concrete surfaces.

- Afull: full surface treatment including micro-perforated film on the glass pyramid.

- Aart: an alternative approach using acoustic lighting, a central art piece, and wooden bleachers.

Acoustic parameter estimations on simulated RIRs, comparison with measured RIR

Rendering the experience

To explore the impact of these treatments, we developed 360° auralisations. For each treatment, we simulated 96 RIRs, convolved them with dry speech, crowd, and background sources, and rendered the results as second-order ambisonics.
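The convolution step can be sketched as follows. Each dry mono source is convolved with the multichannel ambisonic RIR simulated for its position, and the results are summed into one ambisonic mix. This is an assumption-laden illustration rather than the project's rendering pipeline: the function names and array layout (channels by samples) are hypothetical. A second-order ambisonic signal carries (2 + 1)² = 9 channels.

```python
import numpy as np

def ambisonic_channels(order):
    """Number of channels for a full-sphere ambisonic signal."""
    return (order + 1) ** 2

def fft_convolve(x, h):
    """Linear convolution of two 1-D signals via FFT."""
    n = len(x) + len(h) - 1
    nfft = 1 << (n - 1).bit_length()
    return np.fft.irfft(np.fft.rfft(x, nfft) * np.fft.rfft(h, nfft), nfft)[:n]

def auralise(dry_sources, ambi_rirs):
    """Mix several dry sources into one ambisonic scene.

    dry_sources: list of 1-D mono signals.
    ambi_rirs:   list of (channels, taps) arrays, one per source,
                 all sharing the same channel count.
    Returns a (channels, samples) array.
    """
    n_ch = ambi_rirs[0].shape[0]
    length = max(len(s) + r.shape[1] - 1
                 for s, r in zip(dry_sources, ambi_rirs))
    mix = np.zeros((n_ch, length))
    for s, r in zip(dry_sources, ambi_rirs):
        for ch in range(n_ch):
            y = fft_convolve(s, r[ch])
            mix[ch, :len(y)] += y
    return mix
```

The resulting mix can then be decoded to loudspeakers or to binaural for headphone playback; relative source levels would need to be set beforehand, for example against the measured ambience levels.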

Raw RIR auralisation (binaural)

Ambience auralisation: recording (binaural)

Ambience auralisation: simulation (binaural)

The auralisations were paired with panoramic images rendered in Blender. The virtual scenes were populated with procedural crowds, matched in density to real conditions.

360° reference photo

360° rendered image

VR application

The final step was to wrap everything into a VR application built in Unity and running on a Meta Quest 3 headset.

Each position showcased a different treatment, and users could switch between soundscapes: crowd noise, announcements, or both. A 360° banner in the lower part of the view showed at all times which treatment was active.

Louvre auralisation VR application

Demo day

At the end of the project, a delegation from the Louvre visited the lab. Demos began with playback of the Hall’s ambience recordings on a 3rd-order ambisonic setup to refocus on the problem, followed by the simulated soundscapes on the same setup to validate their realism. Then came the VR tests: each visitor explored the acoustic treatments firsthand before sitting down to discuss which option to move forward with.